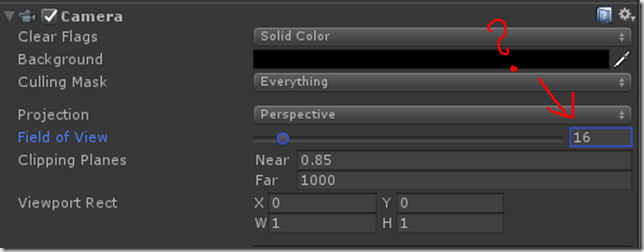

I was working on a HoloLens project when I noticed, as I do about 2 or 3 times during every HoloLens project, that I didn’t know what the Field of View property of the default camera does in a HoloLens app. I always see it out of the corner of my eye when I have the Unity IDE open. The HoloToolkit camera configuration tool automatically sets it to 16. I’m not sure why. (h/t Jesse McCulloch, who pointed me to an HTK thread that provides more background on how the 16 value came about.)

So I finally decided to test this out for myself. In a regular Unity app, increasing the angular field of view increases how much of the scene the camera can see, but in turn makes everything in it smaller. The concept comes from regular camera lenses and is related to the notion of a camera’s focal length, as demonstrated in the fit-inducing (but highly illustrative) animated gif below.

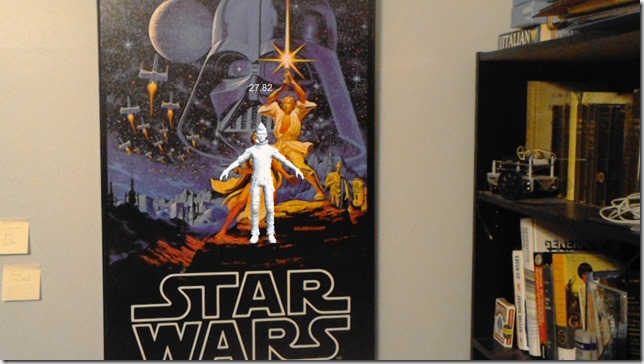

I built a quick app with the default Ethan character and placed a 3D Text element over him that checks the camera’s Field of View property on every update.

    using UnityEngine;

    // Attach to the 3D Text object; displays the main camera's current FOV.
    public class updateFOV : MonoBehaviour
    {
        private TextMesh _mesh;

        void Awake()
        {
            _mesh = GetComponent<TextMesh>();
        }

        // Update is called once per frame
        void Update()
        {
            // Camera.fieldOfView is the vertical field of view, in degrees.
            _mesh.text = System.Math.Round(Camera.main.fieldOfView, 2).ToString();
        }
    }

Then I added a Keyword Manager from the HoloToolkit to handle changing the angular FOV of the camera dynamically.

    // Handlers invoked by the Keyword Manager's voice commands.
    public void IncreaseFOV()
    {
        Camera.main.fieldOfView = Camera.main.fieldOfView + 1;
    }

    public void DecreaseFOV()
    {
        Camera.main.fieldOfView = Camera.main.fieldOfView - 1;
    }

    public void ResetFOV()
    {
        // Reverts the camera to its default field of view.
        Camera.main.ResetFieldOfView();
    }

When I ran the app in my HoloLens, the FOV reader started showing “17.82” instead of “16”. This must be the vertical FOV of the HoloLens, something else I’ve often wondered about. Assuming a 16:9 aspect ratio and scaling the angle linearly, this gives a horizontal FOV of roughly “31.68”, which is really close to what Oliver Kreylos guessed way back in 2015.

The next step was to increase the Field of View using my voice commands. There were two possible outcomes: either the Unity app would somehow override the natural FOV of the HoloLens and actually distort my view, making the Ethan model smaller as the FOV increased, or the app would just ignore whatever I did to the Main Camera’s FieldOfView property.

The second thing happened. As I increased the Field Of View property from “17.82” to “27.82”, there was no change in the way the character was projected. HoloLens ignores that setting.

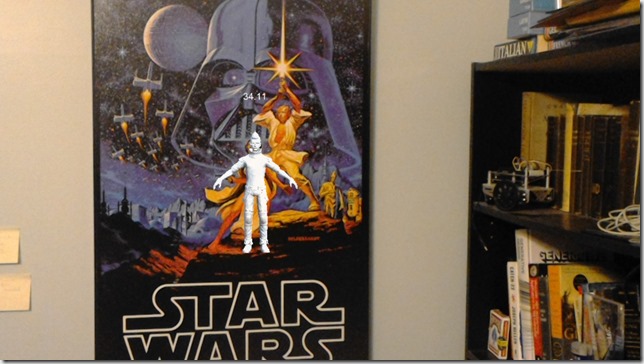

Something strange did happen, though, after I called the ResetFieldOfView method on the Main Camera and tried to take a picture. After resetting, the FOV reader went back to reporting the true value of the FOV. When I tried to take a picture of this, however, the FOV jumped up to “34.11”, then dropped back to “17.82”.

This, I would assume, is the vertical FOV of the locatable camera (RGB camera) on the front of the HoloLens when taking a normal picture. Assuming again a 16:9 aspect ratio, this would provide a “60.64” horizontal angular FOV. According to the documentation, though, the horizontal FOV should be “67” degrees, which is close but not quite right.
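As a rough sketch of the arithmetic behind the horizontal figures in this post: multiplying the vertical angle by 16/9 is a small-angle shortcut. For an ideal pinhole camera, it is the tangent of the half-angle that scales with the aspect ratio, not the angle itself. The helper below is not part of the test app; the `FovMath` class and its method names are made up for illustration, and the 16:9 aspect ratio is still just an assumption.

```csharp
using System;

// Hypothetical helper, not part of the HoloLens test app.
public static class FovMath
{
    // Shortcut used in this post: scale the vertical angle by the aspect ratio.
    public static double HorizontalFovLinear(double verticalFovDeg, double aspect)
    {
        return verticalFovDeg * aspect;
    }

    // Pinhole-camera conversion: tan(h/2) = aspect * tan(v/2).
    public static double HorizontalFovExact(double verticalFovDeg, double aspect)
    {
        double halfVerticalRad = verticalFovDeg * Math.PI / 360.0;
        double halfHorizontalRad = Math.Atan(Math.Tan(halfVerticalRad) * aspect);
        return halfHorizontalRad * 360.0 / Math.PI;
    }
}
```

Calling `FovMath.HorizontalFovLinear(17.82, 16.0 / 9.0)` reproduces the 31.68 figure, while `HorizontalFovExact` gives about 31.1 for the same input. For the 34.11 reading the exact version gives about 57.2 rather than 60.64, so under the 16:9 assumption the gap to the documented 67 degrees gets wider, not narrower.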

“34.11” is also close to double “17.82”, so maybe it has something to do with unsplitting the render sent to each eye? Except that double would actually be “35.64”, and I don’t really know how the stereoscopic rendering pipeline works, so who knows.

In any case, I at least answered the original question that was bothering me – fiddling with that slider next to the Camera’s Field of View property doesn’t really do anything. I need to just ignore it.