One of the interesting potential commercial uses for the Kinect for Windows sensor is as a real-time tool for collecting information about people passing by. The face detection capabilities of the Kinect for Windows SDK lend themselves to these scenarios. Just as Google and Facebook currently collect information about your browsing habits, Kinects can be set up in stores and malls to observe you and determine your shopping habits.

There’s just one problem with this. On the face of it, it’s creepy.

To help parse what is happening in these scenarios, there is a sophisticated marketing vocabulary intended to distinguish “creepy” face detection from the useful and helpful kind.

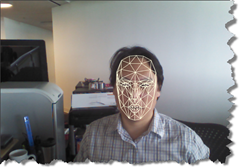

First of all, face detection on its own does little more than detect that there is a face in front of the camera. The face detection algorithm may go even further and break down parts of the face into a coordinate system. Even this, however, does not turn a particular face into a token that can be indexed and compared against other faces.

Turning an impression of a face into some sort of hash takes us to the next level and becomes face recognition rather than merely detection. But even here there is parsing to be done. Anonymous face recognition seeks to determine generic information about a face rather than specific, identifying information. Anonymous face recognition provides data about a person’s age and gender – information that is terribly useful to retail chains.

Consider that today, the main way retailers collect this information is by placing a URL at the bottom of a customer’s receipt and asking them to visit the site and provide this sort of information when the customer returns home. The fulfillment rate on this strategy is obviously horrible.

Being able to collect this information unobtrusively would allow retailers to better understand how inventory should be shifted around seasonally and regionally to provide customers with the sorts of retail items they are interested in. Power drills or perfume? The Kinect can help with these stocking questions.

But have we gotten beyond the creepy factor with anonymous face recognition? It actually depends on where you are. In Asia, there is a high tolerance for this sort of surveillance. In Europe, it would clearly be seen as creepy. North America, on the other hand, is somewhere between Europe and Asia on privacy issues. Anonymous face recognition is non-creepy if customers are provided with a clear benefit from it – just as they don’t mind having ads delivered to their browsers as long as they know that getting ads makes other services free.

Finally, identity face recognition in retail would allow custom experiences like the virtual ad delivery system portrayed in the mall scene from Minority Report. Currently, this is still considered very creepy.

At work, I’ve had the opportunity to work with NEC, IBM and other vendors on the second kind of face recognition. The surprising thing is that getting anonymous face recognition working correctly is much harder than getting full face recognition working. It requires a lot of probabilistic logic as well as a huge database of faces to get any sort of accuracy when it comes to demographics. Even gender is surprisingly difficult.

Identity face recognition, on the other hand, while challenging, is something you can have in your living room if you have an Xbox and a Kinect hooked up to it. This sort of face recognition is used to log players automatically into their consoles and can even distinguish different members of the same family (for engineers developing facial recognition software, it is an irritating quirk of fate that people who look alike also tend to live in the same house).

If you would like to try identity face recognition yourself, take a look at the Luxand Face SDK. Luxand provides a 30-day trial license, which I tried out a few months ago. The code samples are fairly good. While Luxand does not natively support Kinect development, it is fairly straightforward to turn data from the Kinect’s RGB stream into images which can then be compared against other images using Luxand.

I used Luxand’s SDK to compare anyone standing in front of the Kinect sensor with a series of photos I had saved. It worked fairly well, but unfortunately only if the person stood directly in front of the sensor and within a foot or two of it (which wasn’t quite what we needed at the time). The heart of the code is provided below. It simply takes color images from the Kinect and compares them against a directory of photos to see if a match can be found. It could be used as part of a system for unlocking a computer when the proper user stands in front of it (though you can probably think of better uses – just try to avoid being creepy).

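    // Runs whenever the Kinect delivers a new color frame.
    // ToBitmap() and ToBitmapSource() are extension helpers not shown here.
    void _sensor_ColorFrameReady(object sender, ColorImageFrameReadyEventArgs e)
    {
        using (var frame = e.OpenColorImageFrame())
        {
            // OpenColorImageFrame() returns null when the frame is no longer available.
            if (frame == null)
                return;

            var image = frame.ToBitmap();
            this.image2.Source = image.ToBitmapSource();
            LookForMatch(image);
        }
    }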

    // Compares the current frame against every stored template and reports
    // whether any of them clears _targetSimilarity.
    // DeleteObject is the gdi32 P/Invoke declared elsewhere in the class.
    private bool LookForMatch(System.Drawing.Bitmap currentImage)
    {
        if (currentImage == null)
            return false;

        IntPtr hBitmap = currentImage.GetHbitmap();
        FSDK.CImage image = null;
        try
        {
            image = new FSDK.CImage(hBitmap);
            FSDK.SetFaceDetectionParameters(false, false, 100);
            FSDK.SetFaceDetectionThreshold(3);

            FSDK.TFacePosition facePosition = image.DetectFace();
            if (facePosition.w == 0)
                return false; // no face in this frame

            FaceTemplate template = new FaceTemplate();
            template.templateData = ExtractFaceTemplateDataFromImage(image);
            if (template.templateData == null)
                return false;

            // _targetSimilarity is a fixed cutoff; FSDK.GetMatchingThresholdAtFAR
            // can derive one from a target false acceptance rate instead.
            bool match = false;
            float bestMatch = 0.0f;
            foreach (FaceTemplate t in faceTemplates)
            {
                // Copy to a local so its field can be passed by ref.
                FaceTemplate candidate = t;
                float similarity = 0.0f;
                FSDK.MatchFaces(ref template.templateData,
                    ref candidate.templateData, ref similarity);

                if (similarity > bestMatch)
                {
                    this.textBlock1.Text = similarity.ToString();
                    bestMatch = similarity;
                    if (similarity > _targetSimilarity)
                        match = true;
                }
            }

            return match && !_isPlaying;
        }
        finally
        {
            // Release the Luxand image, the GDI handle and the bitmap.
            if (image != null)
                image.Dispose();
            DeleteObject(hBitmap);
            currentImage.Dispose();
        }
    }

    // Builds a face template, preferring eye-based alignment and falling back
    // to the detected face region. Returns null when no face is found.
    // The image is not disposed here; it belongs to the caller.
    private byte[] ExtractFaceTemplateDataFromImage(FSDK.CImage cimg)
    {
        var facePosition = cimg.DetectFace();
        if (facePosition.w == 0)
            return null;

        Luxand.FSDK.TPoint[] facialFeatures;
        try
        {
            facialFeatures = cimg.DetectEyesInRegion(ref facePosition);
        }
        catch (Exception)
        {
            // Eye detection failed, so use the whole face region instead.
            return cimg.GetFaceTemplateInRegion(ref facePosition);
        }

        return cimg.GetFaceTemplateUsingEyes(ref facialFeatures);
    }
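
The matching decision in `LookForMatch` is easier to reason about once it is separated from the SDK calls. Here is a minimal sketch of just that step, assuming you have already collected one similarity score per stored photo (higher meaning more alike). `FaceMatcher` and `FindBestMatch` are hypothetical names of my own and are not part of the Luxand or Kinect SDKs.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; not part of any SDK.
public static class FaceMatcher
{
    // Returns the index of the highest-scoring candidate whose similarity
    // is strictly greater than the threshold, or -1 when none qualifies.
    public static int FindBestMatch(IReadOnlyList<float> similarities, float threshold)
    {
        int bestIndex = -1;
        float bestScore = threshold;

        for (int i = 0; i < similarities.Count; i++)
        {
            if (similarities[i] > bestScore)
            {
                bestScore = similarities[i];
                bestIndex = i;
            }
        }

        return bestIndex;
    }
}
```

Calling `FaceMatcher.FindBestMatch(new[] { 0.42f, 0.97f, 0.88f }, 0.95f)` returns `1`, while raising the threshold to `0.99f` returns `-1`. Returning an index rather than a bool lets the caller know which stored photo matched, which the listing above discards.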