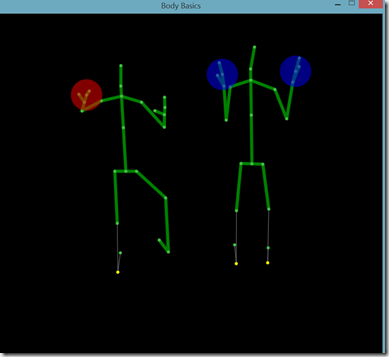

One of the great strengths of the original Kinect sensor was the community that gathered around it almost by happenstance. The same thing is now happening with Kinect for Windows v2, even though the non-Xbox version of the hardware has yet to be released. Going into this v2 release, Microsoft took the prescient step of reaching out to creative coders, researchers and digital agency types (that’s me) with pre-release hardware to start playing with.

Here are just a few of the things they’ve come up with:

wieden-kennedy/Cinder-Kinect2 – Stephen Schieberl’s Kinect v2 wrapper for Cinder

englandrp/Kinect2-Data-Transmitter – a Unity3D plugin for Kinect v2

rfilkov/kinect2-unity-example-with-ms-sdk – another Unity3D plugin for Kinect v2 (also, I believe, using the Data Transmitter strategy)

OpenKinect/libfreenect2 – open source drivers for Kinect v2 by Josh Blake, Theo Watson, et al. (still in progress, but this will allow the sensor to run on operating systems other than Windows 8, for instance on a Mac)

https://github.com/MarcusKohnert/Kinect.ReactiveV2 – a reactive library for Kinect v2

https://github.com/DevHwan/K4Wv2OpenCVModule – OpenCV bridge for Kinect v2

http://k4wv2heartrate.codeplex.com/ – Dwight Goins’ sample implementation of heart rate detection using Kinect v2

…and I’ve heard through the grapevine about twice as many more in the works.

The walled-garden approach to software doesn’t work anymore, and the Microsoft Kinect for Windows team seems to have embraced that in a big way. Not only are people experimenting with the new hardware, they are also making their code publicly available (free as in beer) in order to foster the community.

This is a philosophical stance that in some ways harkens back to one of Bill Gates’ early intuitions when he was building the Microsoft Corporation. At some point, he realized that he couldn’t be the smartest person in the room forever. What he could do, though, was to gather the best people he could find and drive them to be their best. He would contribute by clearing the roadblocks and guiding these people toward his goals. This, more or less, was also how Steve Jobs went about adding value to his company and to contemporary culture.

The community currently building up around Kinect v2 is like that, but with a difference. The goal isn’t to lead anyone in a particular direction. Instead, the objective is to open up tools and toys that let people discover their own goals. Each community member contributes to something bigger than herself by making it possible for other people to do something new and original, whether that turns out to be an app, an art installation, a better way of shopping, an improved layout for visualizing spreadsheets, or whatever.

So what’s so bad about walled gardens?

Quite simply, they stifle innovation. The Microsoft I’d grown used to in the double noughts was all about best practices, guidelines, “components” and sealed classes.

Ultimately, Microsoft did everything it could to minimize support calls. Developers were given a certain way to do things – whether this was a good way or not – and if they went off the reservation (sorry, left the walled garden) they were typically on their own: no callbacks from MS and a lot of abuse on support forums asking ‘wtf are you doing that for?’

And I can understand all that (support calls suck), but the end result of this approach was that innovation increasingly happened outside of Microsoft platforms. Microsoft, in turn, became a ‘use case’ culture. Instead of opening up their APIs like everyone else was doing, their most common response to requests was ‘what’s your use case?’ followed by ‘we’ll get back to you on that.’

The logic of this was very simple. Microsoft in the 2000s was about standardizing programming practices. If you’re the sort of person who wants to innovate, however, you don’t want to do the ‘standard’: by definition, you don’t want to do what everyone else is doing. So you looked for platforms with open APIs and tried to find ways to do things with those APIs that no one else was doing; in other words, you hacked them.

And Microsoft, traditionally, hasn’t liked people using their products in ways they are not intended to be used – they haven’t liked hacking.

The original Kinect sensor changed all that. It took a moment, but as videos of people using a hacked driver to read the Kinect sensor streams started appearing all over the place, the Grinch’s heart grew three sizes that day. MS got instant street cred simply by letting people do what they were doing anyway and giving it a thumbs up. Overnight, Microsoft was once again recognized as an innovative company (they always had been, really, but that wasn’t the public perception).

Which is why libfreenect2 is so exciting. It’s a project that will, ultimately, allow you to use Kinect v2 on a Mac.

To put things into context, PrimeSense (the provider of the depth technology behind Kinect v1) was bought by Apple last year. PrimeSense’s alternative open source library and driver package for Kinect, OpenNI, was suddenly put in jeopardy, and an announcement circulated that the OpenNI site was coming down in April 2014. So…

The anti-Microsoft is currently bringing OpenNI inside its walled garden. Meanwhile, Microsoft is providing devices to the people writing libfreenect2, which will allow people to use Kinect devices outside of Microsoft’s not-so-walled garden.

How do you like them Apples?