Last week saw the announcement of the long-awaited OpenCV 3.0 release. OpenCV is the open source computer vision library, originally developed by Intel, that lets hackers and artists analyze images in fun, fascinating and sometimes useful ways. It is an amazing library when combined with a sophisticated camera like the Kinect 2.0 sensor. The one downside is that you typically need to know how to work in C++ to make it work for you.

This is where EmguCV comes in. Emgu is a .NET wrapper library for OpenCV that lets you use some of OpenCV's power from .NET applications built with WPF or WinForms. Furthermore, all it takes to make it work with the Kinect is a few conversion functions, which I will show you in this post.

Emgu gotchas

The first trick is simply getting Emgu working for you. Because it is a wrapper around C++ libraries, there are a few not-so-obvious steps you need to remember.

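1. First of all, Emgu downloads as an executable that extracts all its files to a folder on your C: drive (e.g. C:\Emgu). This is actually convenient, since it makes sharing code and writing instructions much easier. The build used in this post is emgucv-windows-universal-cuda 2.4.10.1940, which wraps OpenCV 2.4.10 rather than 3.0.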

2. Any CPU isn't going to cut it when setting up your project. You will need to specify your target CPU architecture (x86 or x64), since the native OpenCV binaries are compiled for one specific architecture, unlike .NET assemblies, which can run as either. Also, remember where your project's executable is being compiled to. For instance, an x64 Debug build is compiled to the folder bin/x64/Debug.

3. You need to grab the correct native OpenCV library files and drop them into your project's output folder. When you run a program that uses Emgu, the executable expects to find the OpenCV DLLs in its own directory. There are lots of ways to do this, such as setting up a pre- or post-build event that copies the necessary files. The easiest way, though, is to go to the folder that matches your target architecture, e.g. C:\Emgu\emgucv-windows-universal-cuda 2.4.10.1940\bin\x64, copy everything in it, and paste it into the matching output folder, e.g. bin/x64/Debug. If you go the copy/paste route, just remember not to Clean or Rebuild your project, since either action can wipe files from the target folder.

4. The last step is the easiest: reference the necessary Emgu libraries. The two base ones are Emgu.CV.dll and Emgu.Util.dll. I like to copy these files into a project subdirectory called libs and reference the DLLs by relative path, but you probably have your own preferred way, too.
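Gotchas 2 and 3 usually show up at runtime as a missing-DLL or bad-image-format exception. One way to catch them early is a small startup check like the sketch below, which reports the architecture of the running process and lists any expected native DLLs that are not sitting next to the executable. `EmguSetupCheck` and its methods are hypothetical helpers written for this post, not part of Emgu; the list of DLL names you pass in is your own assumption about what your Emgu build ships.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical startup check for the x86/x64 and native-DLL gotchas. Not part of Emgu.
public static class EmguSetupCheck
{
    // Architecture folder ("x64" or "x86") whose native OpenCV DLLs must sit
    // next to the executable for the current process.
    public static string RequiredNativeFolder() =>
        Environment.Is64BitProcess ? "x64" : "x86";

    // Returns the names in `expected` that do not exist as files in `directory`.
    public static List<string> MissingFiles(string directory, IEnumerable<string> expected) =>
        expected.Where(name => !File.Exists(Path.Combine(directory, name))).ToList();

    // Prints a warning for each expected DLL missing from the folder the executable
    // was loaded from (e.g. bin/x64/Debug). Returns true if nothing is missing.
    public static bool Verify(IEnumerable<string> nativeDlls)
    {
        string outputDir = AppDomain.CurrentDomain.BaseDirectory;
        List<string> missing = MissingFiles(outputDir, nativeDlls);
        foreach (string name in missing)
            Console.WriteLine(
                $"Missing {name} in {outputDir}; copy it from Emgu's bin\\{RequiredNativeFolder()} folder.");
        return missing.Count == 0;
    }
}
```

For example, you could call `EmguSetupCheck.Verify(new[] { "cvextern.dll" })` at the top of the `MainWindow` constructor. The file name there is an assumption; check the actual contents of the `bin\x64` or `bin\x86` folder in your Emgu install.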

WPF and Kinect SDK 2.0

I'm going to show you how to work with Emgu and Kinect in a WPF project. The main difficulty is simply converting between the image types Kinect knows about and the image types native to Emgu. I like to do these conversions using extension methods. I provided these extensions in my first book, Beginning Kinect Programming, which covered the Kinect 1, and will basically just be stealing from myself here.

I assume you already know the basics of setting up a simple Kinect program in WPF. In MainWindow.xaml, just add an Image control to the root Grid and name it rgb:

```xml
<Grid>
    <Image x:Name="rgb"></Image>
</Grid>
```

Make sure you have a reference to the Microsoft.Kinect 2.0 DLL, and put your Kinect initialization code in your code-behind:

```csharp
// Members of the MainWindow class in MainWindow.xaml.cs (requires using Microsoft.Kinect;)
KinectSensor _sensor;
ColorFrameReader _rgbReader;

private void InitKinect()
{
    _sensor = KinectSensor.GetDefault();
    _rgbReader = _sensor.ColorFrameSource.OpenReader();
    _rgbReader.FrameArrived += rgbReader_FrameArrived;
    _sensor.Open();
}

public MainWindow()
{
    InitializeComponent();
    InitKinect();
}

protected override void OnClosing(System.ComponentModel.CancelEventArgs e)
{
    // Release the frame reader before closing the sensor.
    if (_rgbReader != null)
    {
        _rgbReader.Dispose();
        _rgbReader = null;
    }

    if (_sensor != null)
    {
        _sensor.Close();
        _sensor = null;
    }

    base.OnClosing(e);
}
```

Kinect SDK 2.0 and Emgu

Now you just need the extension methods for converting between Bitmap, BitmapSource, and IImage objects. For this to work, your project will also need to reference System.Drawing.dll:

```csharp
using System;
using System.Drawing;
using System.Drawing.Imaging;
using System.Runtime.InteropServices;
using System.Windows;
using System.Windows.Media;
using System.Windows.Media.Imaging;
using Emgu.CV;
using Microsoft.Kinect;

static class Extensions
{
    // Frees the GDI bitmap handle created by Bitmap.GetHbitmap().
    [DllImport("gdi32")]
    private static extern int DeleteObject(IntPtr o);

    // Wraps a raw 32-bit pixel buffer (BGRA byte order) in a System.Drawing.Bitmap.
    public static Bitmap ToBitmap(this byte[] data, int width, int height,
        System.Drawing.Imaging.PixelFormat format = System.Drawing.Imaging.PixelFormat.Format32bppRgb)
    {
        var bitmap = new Bitmap(width, height, format);
        var bitmapData = bitmap.LockBits(
            new System.Drawing.Rectangle(0, 0, bitmap.Width, bitmap.Height),
            ImageLockMode.WriteOnly,
            bitmap.PixelFormat);
        // A single copy works because 32bpp rows have no padding (stride == width * 4).
        Marshal.Copy(data, 0, bitmapData.Scan0, data.Length);
        bitmap.UnlockBits(bitmapData);
        return bitmap;
    }

    // Copies a Kinect color frame into a new Bitmap, converting it to BGRA first.
    public static Bitmap ToBitmap(this ColorFrame frame)
    {
        if (frame == null || frame.FrameDescription.LengthInPixels == 0)
            return null;

        var width = frame.FrameDescription.Width;
        var height = frame.FrameDescription.Height;
        var data = new byte[width * height * PixelFormats.Bgra32.BitsPerPixel / 8];
        frame.CopyConvertedFrameDataToArray(data, ColorImageFormat.Bgra);
        return data.ToBitmap(width, height);
    }

    // Converts a GDI+ Bitmap into a WPF BitmapSource.
    public static BitmapSource ToBitmapSource(this Bitmap bitmap)
    {
        if (bitmap == null) return null;

        IntPtr ptr = bitmap.GetHbitmap();
        try
        {
            return System.Windows.Interop.Imaging.CreateBitmapSourceFromHBitmap(
                ptr,
                IntPtr.Zero,
                Int32Rect.Empty,
                BitmapSizeOptions.FromEmptyOptions());
        }
        finally
        {
            // Release the HBITMAP even if the conversion throws.
            DeleteObject(ptr);
        }
    }

    public static Image<TColor, TDepth> ToOpenCVImage<TColor, TDepth>(this ColorFrame frame)
        where TColor : struct, IColor
        where TDepth : new()
    {
        // The Emgu Image copies the pixels, so the temporary Bitmap can be disposed.
        using (var bitmap = frame.ToBitmap())
        {
            return bitmap == null ? null : new Image<TColor, TDepth>(bitmap);
        }
    }

    public static Image<TColor, TDepth> ToOpenCVImage<TColor, TDepth>(this Bitmap bitmap)
        where TColor : struct, IColor
        where TDepth : new()
    {
        return new Image<TColor, TDepth>(bitmap);
    }

    // Converts any Emgu image back into a WPF BitmapSource.
    public static BitmapSource ToBitmapSource(this IImage image)
    {
        return image.Bitmap.ToBitmapSource();
    }
}
```
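The extension methods above lean on two facts about the pixel data: a BGRA frame needs exactly 4 bytes per pixel with no row padding, and `ColorImageFormat.Bgra` writes each pixel as blue, green, red, alpha, which is the same byte order GDI+ uses in memory for its 32bpp formats, so `Marshal.Copy` needs no reordering. The standalone sketch below works on plain byte arrays to show that arithmetic and what dropping the alpha byte means when a frame ends up in an `Image<Bgr, byte>`. `BgraBuffers` and its methods are hypothetical illustrations, not part of Emgu or the Kinect SDK, and the loop shows the memory layout rather than how Emgu actually performs its conversion.

```csharp
using System;

// Hypothetical helpers for illustration only; not part of Emgu or the Kinect SDK.
public static class BgraBuffers
{
    // BGRA uses 32 bits (4 bytes) per pixel.
    public const int BytesPerPixel = 4;

    // Bytes needed for one tightly packed BGRA frame (no padding between rows).
    public static int BufferLength(int width, int height) => width * height * BytesPerPixel;

    // Copies a packed BGRA buffer into a packed BGR buffer by dropping each pixel's
    // alpha byte. Illustrates the byte layout only; Emgu performs its own conversion.
    public static byte[] BgraToBgr(byte[] bgra, int width, int height)
    {
        if (bgra == null) throw new ArgumentNullException(nameof(bgra));
        if (bgra.Length != BufferLength(width, height))
            throw new ArgumentException("Buffer length must equal width * height * 4.", nameof(bgra));

        var bgr = new byte[width * height * 3];
        for (int pixel = 0; pixel < width * height; pixel++)
        {
            int src = pixel * BytesPerPixel;
            int dst = pixel * 3;
            bgr[dst] = bgra[src];         // blue
            bgr[dst + 1] = bgra[src + 1]; // green
            bgr[dst + 2] = bgra[src + 2]; // red
            // bgra[src + 3] is alpha and is discarded.
        }
        return bgr;
    }
}
```

For a 1920 × 1080 Kinect 2 color frame, `BufferLength(1920, 1080)` is 8,294,400 bytes, the same size that `ToBitmap(this ColorFrame)` allocates for every frame.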

Kinect SDK 2.0 and Computer Vision

Here is some basic code that uses these extension methods to turn each ColorFrame that Kinect sends you into an Emgu IImage, and then convert the IImage back into a BitmapSource for display:

```csharp
// Requires using Emgu.CV; and using Emgu.CV.Structure; (for Bgr)
void rgbReader_FrameArrived(object sender, ColorFrameArrivedEventArgs e)
{
    using (var frame = e.FrameReference.AcquireFrame())
    {
        if (frame == null) return;

        // ColorFrame -> Bitmap -> Emgu Image<Bgr, byte>; both are disposed when done.
        using (var bitmap = frame.ToBitmap())
        using (var image = bitmap.ToOpenCVImage<Bgr, byte>())
        {
            //do something here with the IImage

            //end doing something

            // Emgu image -> BitmapSource for the WPF Image control.
            this.rgb.Source = image.ToBitmapSource();
        }
    }
}
```

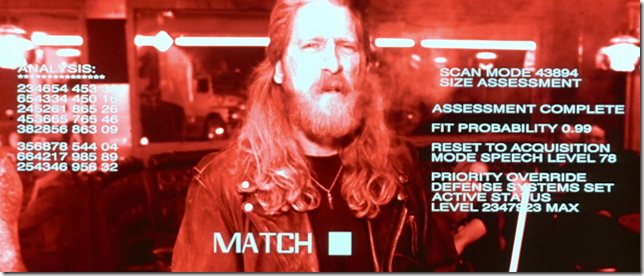

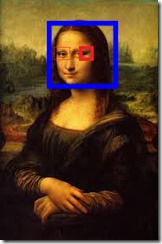

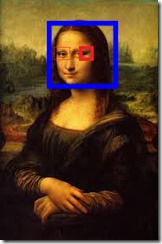

You should now be able to plug in any of the sample code provided with Emgu to get some cool CV going. As an example, the code below uses Haar cascade classifiers to detect faces and eyes in the Kinect video stream. I only run detection every 10 frames, because the Kinect sends 30 frames a second (one roughly every 33 ms) while the Haar cascade code can take as long as 80 ms to process a frame. Here's what the code looks like:

```csharp
// DetectFace comes from the FaceDetection sample that ships with Emgu; copy it into your
// project, and put both cascade XML files where the relative paths can find them
// (normally the output folder).
int frameCount = 0;
List<System.Drawing.Rectangle> faces;
List<System.Drawing.Rectangle> eyes;

void rgbReader_FrameArrived(object sender, ColorFrameArrivedEventArgs e)
{
    using (var frame = e.FrameReference.AcquireFrame())
    {
        if (frame == null) return;

        using (var bitmap = frame.ToBitmap())
        using (var image = bitmap.ToOpenCVImage<Bgr, byte>())
        {
            //do something here with the IImage
            const int frameSkip = 10;

            // Run the slow Haar cascade detection only on every 10th frame.
            if (++frameCount == frameSkip)
            {
                long detectionTime;
                faces = new List<System.Drawing.Rectangle>();
                eyes = new List<System.Drawing.Rectangle>();
                DetectFace.Detect(image, "haarcascade_frontalface_default.xml", "haarcascade_eye.xml",
                    faces, eyes, out detectionTime);
                frameCount = 0;
            }

            // Draw the most recent detections on every frame, not only on detection frames.
            if (faces != null)
            {
                foreach (System.Drawing.Rectangle face in faces)
                    image.Draw(face, new Bgr(System.Drawing.Color.Red), 2);
                foreach (System.Drawing.Rectangle eye in eyes)
                    image.Draw(eye, new Bgr(System.Drawing.Color.Blue), 2);
            }
            //end doing something

            this.rgb.Source = image.ToBitmapSource();
        }
    }
}
```
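The frame-skipping counter in the handler can be pulled out into a small class, which also makes the timing arithmetic explicit: at 30 frames per second a new frame arrives roughly every 33 ms, so processing every 10th frame gives one detection about every 333 ms, well above the up-to-80 ms a detection can take. `FrameSampler` is a hypothetical helper written for this sketch, not part of Emgu or the Kinect SDK; it fires on the same frames as the `++frameCount == frameSkip` test.

```csharp
using System;

// Hypothetical helper: "run expensive work every N frames". Not part of Emgu or Kinect.
public sealed class FrameSampler
{
    private readonly int _interval;
    private int _count;

    public FrameSampler(int interval)
    {
        if (interval < 1) throw new ArgumentOutOfRangeException(nameof(interval));
        _interval = interval;
    }

    // Returns true on the Nth, 2Nth, 3Nth, ... call and false otherwise.
    public bool ShouldProcess()
    {
        if (++_count < _interval) return false;
        _count = 0;
        return true;
    }

    // Milliseconds between processed frames at the given input frame rate,
    // e.g. 30 fps with an interval of 10 gives about 333 ms.
    public double MillisecondsBetweenSamples(double framesPerSecond) =>
        1000.0 * _interval / framesPerSecond;
}
```

Inside the handler, `if (sampler.ShouldProcess()) { ... }` would take the place of the counter test. Because the detection still runs synchronously inside the handler, the frame on which it runs is delayed by the detection time; the sampling only keeps that cost from landing on every frame.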
