[Hardware specs were released this week. This post is now updated to reflect the final specs.]

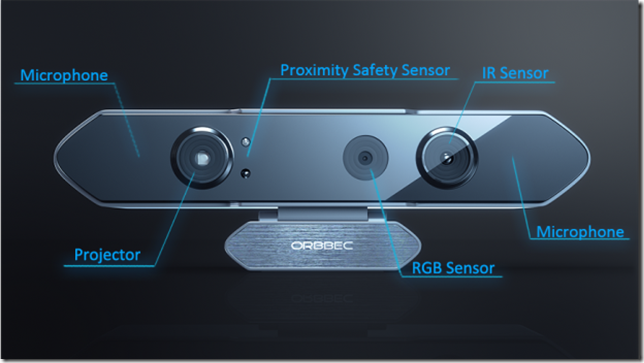

In addition to a sophisticated AR display, the Microsoft HoloLens contains a wide array of sensors that constantly collect data about the user’s external and internal environments. These sensors are used to synchronize the augmented reality world with the real world as well as to respond to commands. The HoloLens’s sensor technology can be thought of as a combination of two streams of research: one from the evolution of the Microsoft Kinect and the other from developments in virtual reality positioning technology. While what follows is almost entirely just well-informed guesswork, we can have a fair degree of confidence in these guesses based on what is already known publicly about the tech behind the Kinect and well-documented VR gear like the Oculus Rift.

While this article will provide a broad survey of the HoloLens sensor hardware, the reader can go deeper into this topic on her own through resources like the book Beginning Kinect Programming by James Ashley and Jarrett Webb, Oliver Kreylos’s brilliant Doc-OK blog, and the perpetually enlightening Oculus blog.

Let’s begin with a list of the sensors believed to be housed in the HoloLens HMD:

- Gyroscope

- Magnetometer

- Accelerometer

- Internal-facing eye tracking cameras (?)

- Ambient Light Detector (?)

- Microphone Array (4 (?) mics)

- Depth sensors / Grayscale Cameras (4)

- RGB camera (1)

- Depth sensor (1)

The first three make up an Inertial Measurement Unit often found in head-mounted displays for AR as well as VR. The eye tracker is technology that became commercialized by 3rd parties like Eye Tribe following the release of the Kinect but not previously used in Microsoft hardware – though it isn’t completely clear that there is any sort of eye tracking being used. There is a small sensor at the front that some people assume is an ambient light detector. The last three are similar to technology found in the Kinect.


I want to highlight the microphone array first because it was always the least understood and most overlooked feature of Kinect. The microphone array is extremely useful for speech recognition because it can distinguish between vocal commands from the user and ambient noise. Ideally, it should also be able to amplify speech from the user so commands can be heard even in a noisy room. Speech commands will likely be lit up by integrating the mic array with Microsoft’s cloud-based Cortana speech recognition technology rather than something like the Microsoft Speech SDK. Depending on how the array is oriented, it may also be able to identify the direction of external sounds. In future iterations of HoloLens, we may be able to marry the microphone array’s directional capabilities with the RGB cameras and face recognition to amplify speech from our friends through the binaural audio speakers built into HoloLens.
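To make the idea of identifying the direction of a sound a little more concrete, here is a minimal sketch of how two microphones can estimate a bearing from the difference in arrival time. It assumes a far-field source and a single pair of mics; the function name, the spacing value and the approach itself are illustrative assumptions, not anything known about how HoloLens or Kinect actually process audio.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 degrees C


def arrival_angle(delay_s, mic_spacing_m):
    """Estimate the bearing of a far-field sound source, in degrees.

    delay_s is how much later the sound reaches the second mic than the
    first. 0 degrees means the source is straight ahead (broadside to the
    pair); +/-90 degrees means it lies along the line joining the mics.
    """
    ratio = SPEED_OF_SOUND * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp small numerical overshoot
    return math.degrees(math.asin(ratio))


# Hypothetical example: mics 10 cm apart, sound arrives 0.1 ms later at mic 2.
angle = arrival_angle(0.0001, 0.10)
```

With more than two microphones, several such pairwise estimates can be combined, which is one reason an array is more useful than a single stereo pair.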


Eye tracking cameras are part of a complex mechanism allowing the human gaze to be used in order to manipulate augmented reality menus. When presented with an AR menu, the user can gaze at buttons in the menu in order to highlight them. Selection then occurs either by maintaining the gaze or by introducing an alternative selection mechanism like a hand press, which would in turn use the depth camera combined with hand tracking algorithms. Besides being extremely cool, eye tracking is a NUI solution to a problem many of us have likely encountered with the Kinect on devices like Xbox. As responsive as hand tracking can be using a depth camera, it still has lag and jitteriness that make manipulation of graphical user interface menus tricky. There’s certainly an underlying problem in trying to transpose one interaction paradigm, menu manipulation, into another paradigm based on gestures. Similar issues occur when we try to put interaction paradigms like a keyboard on a touch screen: it can be made to work, but isn’t easy. Eye tracking is a way to remove friction when using menus in augmented reality. It’s fascinating, however, to imagine what else we could use it for in future HoloLens iterations. It can be used to store images and environmental data whenever our gaze dwells for a threshold amount of time on external objects. When we want to recall something we saw during the day, the HoloLens can bring it back to us: that book in the book store, that outfit the guy in the coffee shop was wearing, the name of the street we passed on the way to lunch. As we sleep each night, perhaps these images can be analyzed in the cloud to discover patterns in our daily lives of which we were previously unaware.
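Selection by maintaining the gaze is usually called dwell selection. The sketch below shows one simple way it could work: a timer that resets whenever the gazed-at target changes and fires once the gaze has rested on the same target long enough. The class name, the threshold and the idea of passing in a target identifier per frame are all assumptions made for illustration; nothing here reflects an actual HoloLens API.

```python
class DwellSelector:
    """Fires a selection when the gaze rests on one target long enough."""

    def __init__(self, dwell_seconds=1.0):
        self.dwell_seconds = dwell_seconds
        self.target = None   # what the user is currently looking at
        self.elapsed = 0.0   # how long they have been looking at it

    def update(self, gazed_target, dt):
        """Feed one gaze sample taken dt seconds after the previous one.

        Returns the target when the dwell threshold is reached, else None.
        """
        if gazed_target != self.target:
            # Gaze moved to something new (or to nothing): restart the timer.
            self.target = gazed_target
            self.elapsed = 0.0
            return None
        if gazed_target is None:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_seconds:
            self.elapsed = 0.0  # require a fresh dwell before firing again
            return gazed_target
        return None
```

A longer threshold reduces accidental selections at the cost of feeling slower, which is why a secondary confirmation such as a hand press is an attractive alternative.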

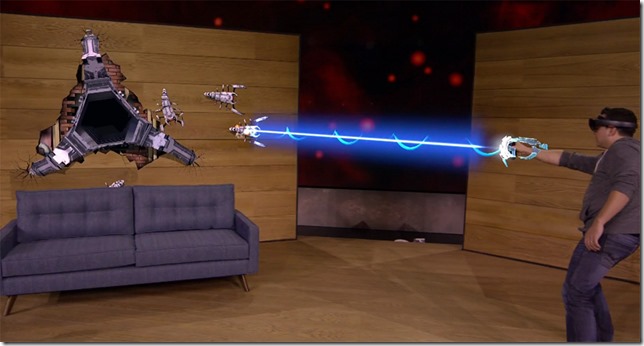

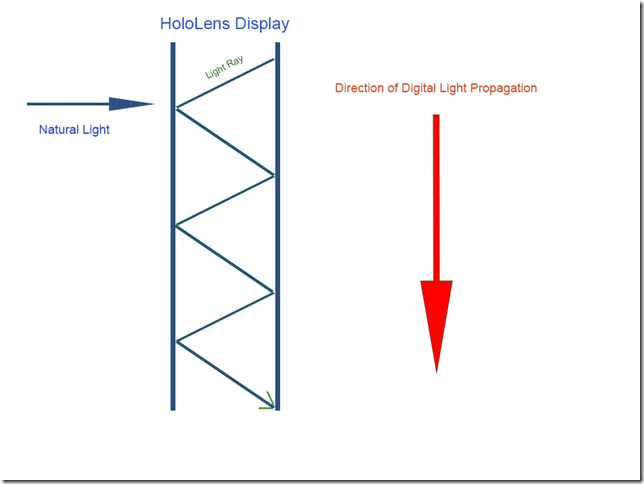

Kinect has a feature called coordinate mapping which allows you to compare pixels from the depth camera and pixels from the color camera. Because the depth camera stream contained information about pixels belonging to human beings and those that did not, the coordinate mapper could be used to identify people in the RGB image. The RGB image in turn could be manipulated to do interesting things with the human-only pixels such as background subtraction and selective application of shaders such that these effects would appear to follow the player around. HoloLens must do something similar but on a vastly grander scale. The HoloLens must map virtual content onto 3D coordinates in the world and make them persist in those locations even as the user twists and turns his head, jumps up and down, and moves freely around the virtual objects that have been placed in the world. Not only must these objects persist, but in order to maintain the illusion of persistence there can be no perceivable lag between user movements and redrawing the virtual objects on the HoloLens’s two stereoscopic displays – perhaps no more than 20 ms of delay.
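As a rough illustration of the background subtraction described above, the sketch below assumes the depth-to-color mapping has already been done, so every color pixel comes with a flag saying whether it belongs to a person. The function name and the nested-list image layout are hypothetical and are not the Kinect SDK’s API.

```python
def remove_background(color_pixels, body_mask, fill=(0, 0, 0)):
    """Keep only the pixels flagged as belonging to a person.

    color_pixels: rows of (r, g, b) tuples from the color camera.
    body_mask:    rows of booleans of the same shape, True where the
                  depth stream says the pixel belongs to a human being.
    """
    return [
        [pixel if is_body else fill
         for pixel, is_body in zip(color_row, mask_row)]
        for color_row, mask_row in zip(color_pixels, body_mask)
    ]
```

The same mask could just as easily select which pixels receive a shader effect instead of which ones are blanked out.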

This is a major problem for both augmented and virtual reality systems. The problem can be broken up into two related issues: orientation tracking and position tracking. Orientation tracking determines where we are looking when wearing an HMD. Position tracking determines where we are located with respect to the external world.
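To see why both pieces are needed, consider a virtual object pinned to a fixed spot in the room. Each frame, its location has to be re-expressed relative to the head, which requires the head’s position and its orientation. The sketch below does this in a simplified top-down 2D view with a single yaw angle; the function name and the coordinate convention (counterclockwise yaw, x and y on the floor plane) are assumptions chosen for illustration.

```python
import math


def world_to_head(point, head_position, head_yaw_rad):
    """Express a world-space (x, y) point in the head's own frame.

    Position tracking supplies head_position; orientation tracking
    supplies head_yaw_rad (counterclockwise, in radians).
    """
    # Undo the head's translation...
    dx = point[0] - head_position[0]
    dy = point[1] - head_position[1]
    # ...then undo its rotation by rotating through the opposite angle.
    c = math.cos(-head_yaw_rad)
    s = math.sin(-head_yaw_rad)
    return (c * dx - s * dy, s * dx + c * dy)
```

If either input is stale or wrong, the object appears to swim or slide instead of staying put, which is exactly the illusion-breaking lag described above.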


Orientation tracking is accomplished through a device known as an Inertial Measurement Unit which is made up of a gyroscope, magnetometer and accelerometer. The inertial unit of measure for an Inertial Measurement Unit (see what I did there?) is radians per second (rad/s), which provides the angular velocity of any head movements. Steve LaValle provides an excellent primer on how the data from these sensors are fused together on the Oculus blog. I’ll just provide a digest here as a way to explain how HoloLens is doing roughly the same thing.

The gyroscope is the core head orientation tracking device. It measures angular velocity. Once we have the values for the head at rest, we can repeatedly check the gyroscope to see whether our head has moved and in which direction it has moved. By combining the speed and direction of that movement with the amount of time that has passed, we can determine how the head is currently oriented compared to its previous orientation. In fact the Oculus does this one thousand times per second and we can assume that HoloLens is collecting data at a similarly furious rate.
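The calculation described here is a numerical integration: each angular velocity reading is multiplied by the time since the previous reading and added to a running angle. The sketch below does this for a single axis to keep things readable; real head trackers integrate all three axes, typically with quaternions. The function name and sample values are illustrative assumptions.

```python
def integrate_yaw(initial_yaw, angular_velocities, dt):
    """Accumulate gyroscope readings (rad/s) into an orientation (rad).

    angular_velocities holds one reading per sample; dt is the time
    between samples in seconds (0.001 for a 1000 Hz sensor).
    """
    yaw = initial_yaw
    for omega in angular_velocities:
        yaw += omega * dt
    return yaw


# One second of turning at a steady 0.5 rad/s, sampled at 1000 Hz,
# ends up at about 0.5 rad.
yaw = integrate_yaw(0.0, [0.5] * 1000, 0.001)
```

Because every reading is added to the total, any small constant error in the readings is added a thousand times per second too.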

Over time, unfortunately, the gyroscope’s data loses precision – this is known as “drift.” The two remaining orientation trackers are used to correct for this drift. The accelerometer performs an unexpected function here by determining the acceleration due to the force of gravity. The accelerometer provides the true direction of “up” (gravity pulls down so the acceleration we feel is actually upward, as in a rocket ship flying directly up) which can be used to correct the gyroscope’s misconstrued impression of the real direction of up. “Up,” unfortunately, doesn’t provide all the correction we need. If you turn your head right and left to make the gesture for “no,” you’ll notice immediately that knowing up in this case tells us nothing about the direction in which your head is facing. In this case, knowing the direction of magnetic north would provide the additional data needed to correct for yaw error – which is why a magnetometer is also a necessary sensor in HoloLens.
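One common and very simple way to apply this kind of correction is a complementary filter: trust the gyroscope for fast changes, and nudge the result a little toward an absolute reference on every step. The sketch below is an assumption about how such a correction could look, not a description of what Oculus or HoloLens actually run. The axis convention in `tilt_from_accel` is also an assumption, and wrap-around of heading angles is ignored.

```python
import math


def tilt_from_accel(ax, ay, az):
    """Pitch and roll (radians) implied by the measured gravity direction.

    Assumes the device is not otherwise accelerating and that the z axis
    reads +g when the head is level.
    """
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    roll = math.atan2(ay, az)
    return pitch, roll


def complementary_step(angle, gyro_rate, reference_angle, dt, alpha=0.98):
    """Advance one angle by one sample.

    The gyro-integrated estimate gets weight alpha; the absolute reference
    (tilt from the accelerometer, or heading from the magnetometer for yaw)
    gets the remaining weight and slowly pulls the drift back out.
    """
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * reference_angle
```

With a stationary head and a gyroscope that wrongly reports a steady 0.01 rad/s, pure integration would wander off without limit, while the filtered angle settles at a small bounded offset.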


Even though the IMU, made up of a gyroscope, magnetometer and accelerometer, is great for determining the deltas in head orientation from moment to moment, it doesn’t work so well for determining diffs in head position. For a beautiful demonstration of why this is the case, you can view Oliver Kreylos’s video Pure IMU-Based Positional Tracking is a No-Go. For a very detailed explanation, you should read Head Tracking for the Oculus Rift by Steven LaValle and his colleagues at Oculus.
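A quick back-of-the-envelope simulation shows the core of the problem. To get position from an accelerometer you have to integrate twice, once to get velocity and again to get position, so even a tiny constant error in the acceleration grows with the square of elapsed time. The bias value below is an arbitrary assumption chosen for illustration, not a measured figure for any real sensor.

```python
def position_error_from_bias(accel_bias, seconds, dt=0.001):
    """Position error (m) caused by a constant accelerometer bias (m/s^2).

    The head is assumed to be perfectly still, so everything accumulated
    here is error.
    """
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(seconds / dt))):
        velocity += accel_bias * dt   # first integration: velocity error
        position += velocity * dt     # second integration: position error
    return position


# A bias of just 0.01 m/s^2 gives roughly half a metre of error after
# ten seconds, and roughly four times that after twenty.
error_10s = position_error_from_bias(0.01, 10)
error_20s = position_error_from_bias(0.01, 20)
```

Orientation only needs a single integration of the gyroscope, which is why its drift is slow enough to be corrected by gravity and magnetic north, while position needs an external reference.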

The Oculus Rift DK2 introduced a secondary camera for positional tracking that sits a few feet from the VR user and detects IR markers on the Oculus HMD. This is known as outside-in positional tracking because the external camera determines the location of the goggles and passes it back to the Oculus software. This works well for the Oculus mainly because the Rift is a tethered device. The user sits or stands in a place near to the computer that runs the experience and cannot stray far from there.

There are some alternative approaches to positional tracking which allow for greater freedom of movement. The HTC Vive virtual reality system, for instance, uses two stationary devices in a setup called Lighthouse. Instead of stationary cameras like the Oculus Rift uses, these Lighthouse boxes are stationary emitters of infrared light that the Vive uses to determine its position in a room with respect to them. This is sometimes called an inside-out positional tracking solution because the HMD is determining its location relative to known external fixed positions.

Google’s Project Tango is another example of inside-out positional tracking that uses the sensors built into handheld devices (smart phones and tablets) in order to add AR and VR functionality to applications. Because these devices aren’t packed with IMUs, they can be laggy. To compensate, Project Tango uses data from onboard device cameras to determine the orientation of the room around the device. These reconstructions are constantly compared against previous reconstructions in order to determine both the device’s position and its orientation with respect to the room surfaces around it.

It is widely assumed that HoloLens uses a similar technique to correct for positional drift from the Inertial Measurement Unit. After all, HoloLens has four depth IR grayscale (?) cameras built into it. The IMU, in this supposition, would provide fast but drifty positional data while the combination of data from the four depth grayscale cameras and an RGB camera provides possibly slower (we’re talking in milliseconds, after all) but much more accurate positional data. Together, this configuration provides inside-out positional tracking that is truly tether-less. This is, in all honesty, a simply amazing feat and almost entirely overlooked in most overviews of the HoloLens.
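The division of labor supposed here, fast but drifty estimates corrected by slower but accurate ones, can be sketched in one dimension. In the toy example below the IMU side is simplified to a stream of per-step velocity estimates, and the camera side to occasional absolute position fixes; each fix pulls the running estimate part of the way back toward the truth. The function name, the data layout and the blend factor are all illustrative assumptions, and the real fusion inside HoloLens is not publicly documented.

```python
def fuse_position(imu_velocities, camera_fixes, dt, blend=0.5):
    """Dead-reckon from IMU-derived velocities, correcting with camera fixes.

    imu_velocities: one velocity estimate (m/s) per time step.
    camera_fixes:   dict mapping a step index to an absolute position (m)
                    for the steps where a camera-based fix is available.
    blend:          how far each fix pulls the estimate (0 = ignore, 1 = snap).
    Returns the estimated position after every step.
    """
    position = 0.0
    track = []
    for step, velocity in enumerate(imu_velocities):
        position += velocity * dt            # fast, but accumulates drift
        fix = camera_fixes.get(step)
        if fix is not None:
            position += blend * (fix - position)  # slow, but absolute
        track.append(position)
    return track


# A stationary headset whose IMU wrongly reports 0.1 m/s for ten seconds.
velocities = [0.1] * 1000
drift_only = fuse_position(velocities, {}, 0.01)
with_camera = fuse_position(
    velocities, {step: 0.0 for step in range(0, 1000, 10)}, 0.01
)
```

Without any fixes the estimate wanders about a metre from the true position over those ten seconds; with a fix every tenth step it stays within a few centimetres.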

The secret sauce that integrates camera data into an accurate and fast reconstruction of the world to be used, among other things, for position tracking is called the Holographic Processing Unit – a chip the Microsoft HoloLens team is designing itself. I’ve heard from reliable sources that fragments from Stonehenge are embedded in each chip to make this magic work.

On top of this, the depth sensors, IR cameras, and RGB cameras will likely be accessible as independent data streams that can be used for the same sorts of functionality for which they have been used in Kinect applications over the past four years: art, research, diagnostic, medical, architecture, and gaming. Though not discussed previously, I would hope that complex functionality we have become familiar with from Kinect development like skeleton tracking and raw hand tracking will also be made available to HoloLens developers.

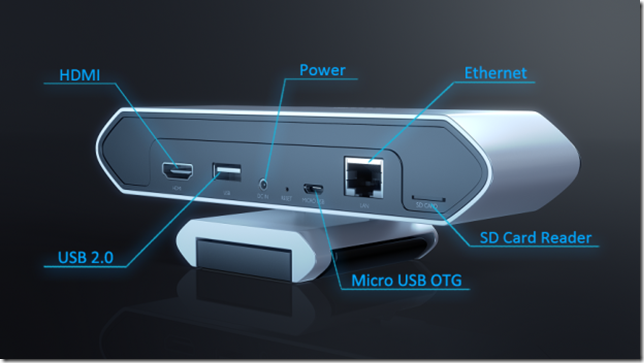

Such a continuity of capabilities and APIs between Kinect and HoloLens, if present, would make it easy to port the thousands of Kinect experiences the creative and software communities have developed over the years leading up to HoloLens. This sort of continuity was, after all, responsible for the explosion of online hacking videos that originally made the Kinect such an object of fascination. The Kinect hardware used a standard USB connector that developers were able to quickly hack and then pass on to, for the most part, pre-existing creative applications that used less well known, less available and non-standard depth and RGB cameras. The Kinect connected all these different worlds of enthusiasts by using common parts and common paradigms.

It is my hope and expectation that HoloLens is set on a similar path.

[This post has been updated 11/07/15 following opportunities to make a closer inspection of the hardware while in Redmond, WA. for the MVP Global Summit. Big thanks to the MPC and HoloLens groups as well as the Emerging Experiences MVP program for making this possible.]

[This post has been updated again 3/3/15 following release of final specs.]