Whether you decide to develop for the Apple Vision Pro using the native stack (Xcode, SwiftUI, RealityKit) or the Unity 3D stack (Unity, C#, XR SDK, PolySpatial), you will need a Mac. Xcode doesn’t run on Windows, and even the Unity tools for AVP development need to run with Unity for Mac.

Beyond that, the specs are fairly specific. You’ll need an Apple silicon chip (M1, M2 or M3), not Intel. You’ll need at least 16 GB of RAM and at least 256 GB of storage.

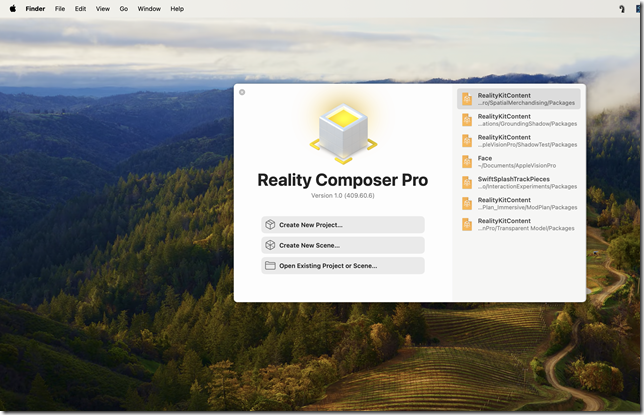

If you are working in the AR/VR/MR/XR/Spatial Computing/HMD space (what a mouthful that has grown into!) it makes sense to learn as much as you can about the tools used to create spatial experiences. And while the Apple native tools like Xcode and the Vision Pro simulator are free (though you’ll need a $100/yr Apple dev account), the hardware requirements remain a bit of a barrier to learning AVP development, much less publishing to the AVP.

If you want to develop either VR or AR apps for the AVP using Unity, you’ll need to pay an additional $2K a year for a Unity Pro seat on top of everything you already need for native AVP development.

So I wanted to look into the minimum cost just to learn to develop for the Vision Pro.

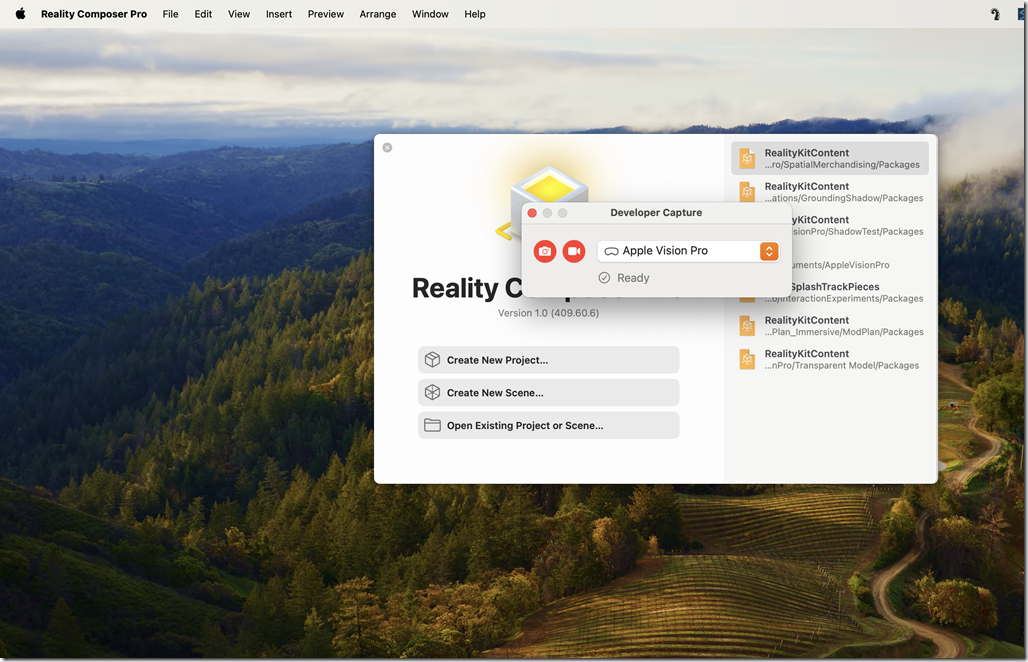

This begins with a $100 Apple developer account. It also assumes that to learn the skills, you don’t necessarily need to spend $3500 on an AVP device. The simulator that comes with Xcode is actually very good and can simulate most of the AVP’s capabilities except for SharePlay. This should be welcome news to the vast number of European, Asian and South American AR/VR devs who won’t have access to headsets for anywhere from six months to a year or more.

So let’s start at $100. The next step is looking into the least expensive hardware options.

Cloud-based

The least expensive, if temporary, way to get hardware is to rent time on a cloud-hosted Mac.

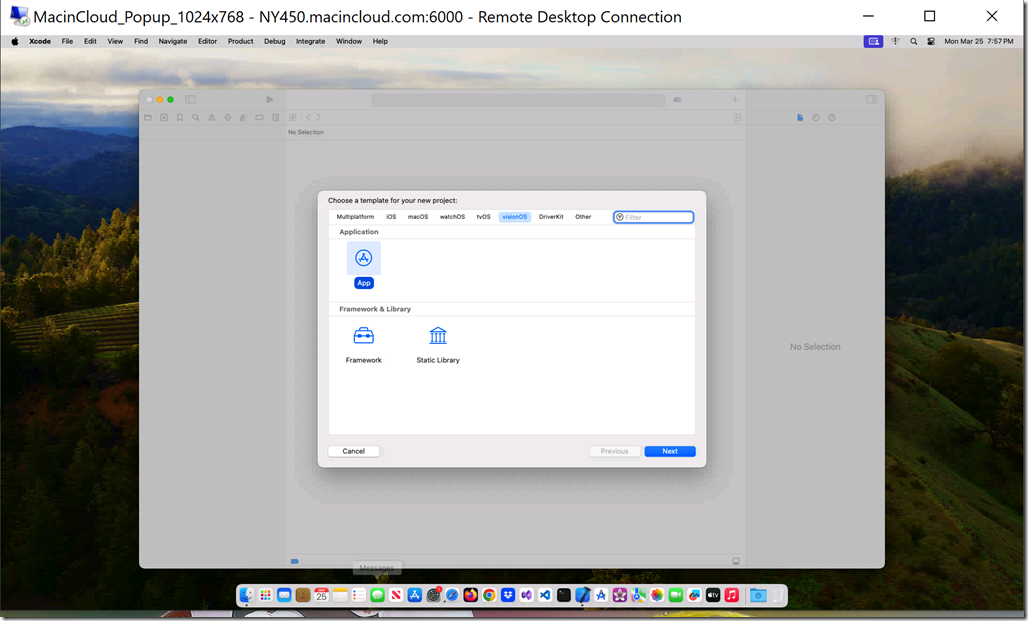

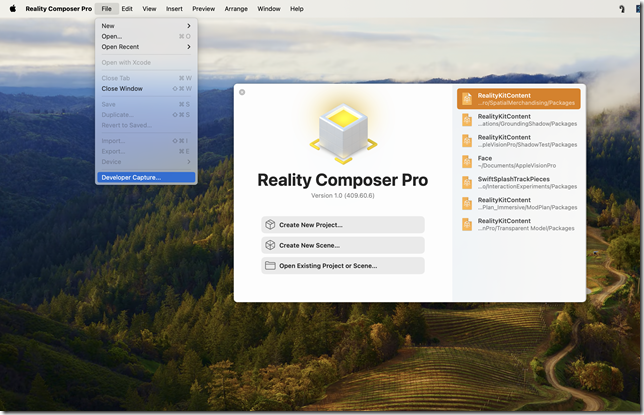

MacInCloud has a VDI solution for Mac minis running an M2 chip with 16 GB of RAM and macOS Sonoma with Xcode 15.2 already installed for $33.50 a month with a 3-hour-a-day limit. In theory this is a nice solution to start learning AVP development for a few months while you decide if you want to invest more in your hardware.

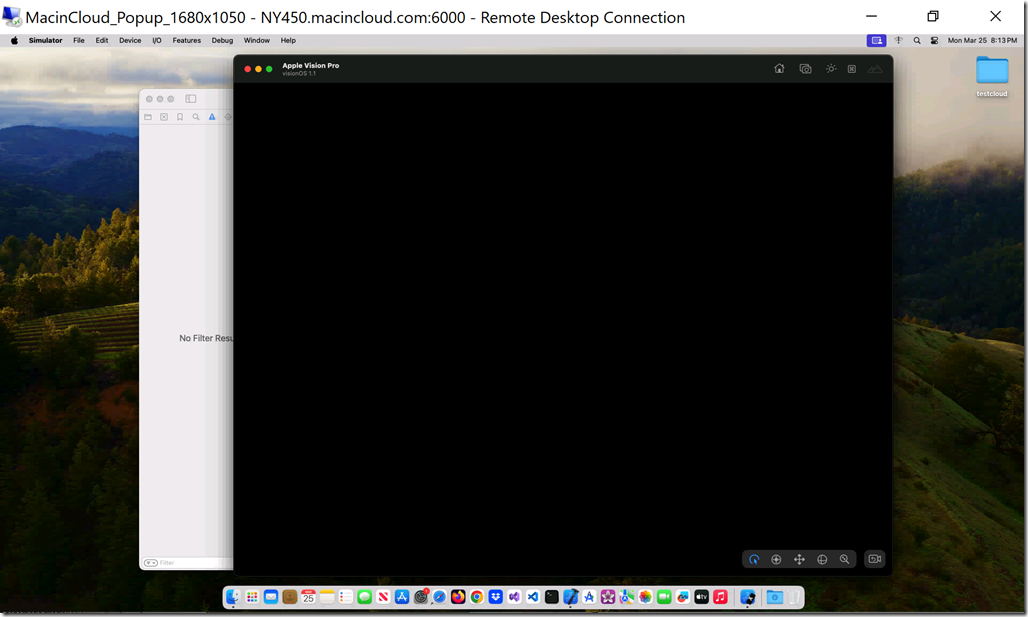

There are some problems with the solution as is, though. First, you are RDPing into a remote machine. If you’ve ever used remote desktop before, you’ll know that there are weird screen resolution issues and, even worse, screen refresh issues with this. It can feel like programming under water. This is why it can only be a temporary solution at best. Next, it includes an older version of Xcode (we’re currently up to version 15.3). You can request to have additional or updated software installed, so this is really only a problem of convenience, though it is nice to be able to install and uninstall software when you need to. The good news is that I was able to write a simple AVP project using MacInCloud’s remote Mac. The bad news is that the Xcode preview window never started rendering and, even worse, the Xcode Simulator running visionOS 1.1 always rendered a black screen.

The rendering problem makes this solution unusable, unfortunately. Once they solve it, though, I think MacInCloud could be a viable solution for learning to develop for the Vision Pro.

New vs Used

If a remote solution isn’t currently viable, then the next step is to figure out what it costs to get a minimum spec piece of hardware, either new or on the secondary market.

Currently (March, 2024) a new Mac mini with 16 GB RAM, a 256 GB SSD, a standard M2 chip, a power cord and no monitor, keyboard or mouse, costs $800 or $66.58/mo.

A 13-inch MacBook Air with similar specs runs $400 more. An iMac with an M3 chip is $700 more. An M3 MacBook Pro is $1000 more. A bottom-of-the-line M2 Max Mac Studio with 32 GB RAM and 512 GB SSD is $1100 more.
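Since those prices are all quoted as deltas from the Mac mini, here is a small sketch that turns them into approximate sticker prices. It only uses the rounded figures from this post; the names `BASE_MAC_MINI`, `PRICE_DELTAS` and `absolute_prices` are just made up for illustration, and real prices will drift from these.

```python
# Base price and deltas as quoted in this post (March 2024, USD, rounded).
BASE_MAC_MINI = 800

# How much each model costs on top of the base Mac mini.
PRICE_DELTAS = {
    "Mac mini (M2, 16 GB, 256 GB)": 0,
    "13-inch MacBook Air": 400,
    "iMac (M3)": 700,
    "MacBook Pro (M3)": 1000,
    "Mac Studio (M2 Max, 32 GB, 512 GB)": 1100,
}


def absolute_prices(base, deltas):
    """Return a dict mapping each model to its approximate full price."""
    return {model: base + delta for model, delta in deltas.items()}


if __name__ == "__main__":
    for model, price in absolute_prices(BASE_MAC_MINI, PRICE_DELTAS).items():
        print(f"{model}: ${price:,}")
```

That puts the MacBook Air at roughly $1,200 and the Mac Studio at roughly $1,900, going by the rounded numbers above.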

The Cadillac model, for reference, would be a 16-inch MacBook Pro with an M3 Max chip, 96 GB of RAM and a 1 TB SSD for $4299.

________________________________________________________________________________

Apple also sells refurbished computers. A refurbished 13-inch MacBook Air with M2/16/256 is currently selling for $1,019.

Back Market is a good site for used Macs. I found a 13-inch MacBook Pro there with M1/16/256 for $804 and a similarly spec’d MacBook Air for $725, as well as an M2 MacBook Air for $949. Your mileage may vary.

MacOfAllTrades has a used MacBook Pro M1/16/512 for $700.

MacSales has a used Mac mini M1/16/256 for $499.
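For comparison with the cloud option, here is a rough break-even sketch: how many months of the $33.50/mo MacInCloud plan it takes to equal the price of buying a machine outright. This is a simplification under my own assumptions. It ignores resale value, financing, the 3-hour daily cap and the rendering problem described earlier, and `breakeven_months` is a hypothetical helper, not anything official.

```python
import math

CLOUD_MONTHLY = 33.50  # MacInCloud plan quoted in this post, USD per month


def breakeven_months(purchase_price, monthly_rent=CLOUD_MONTHLY):
    """Smallest whole number of months at which total rent >= purchase price."""
    return math.ceil(purchase_price / monthly_rent)


if __name__ == "__main__":
    print(breakeven_months(499))  # used Mac mini M1/16/256
    print(breakeven_months(800))  # new Mac mini M2/16/256
```

By this math, renting costs as much as the $499 used Mac mini after 15 months, and as much as the new $800 Mac mini after 24 months.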

Summary

If you want to learn Apple Vision Pro development right now and you are coming from Windows development, your startup cost is going to be around $600:

- $100 Apple Developer Account

- $499 min spec hardware to run Xcode 15.3 and the visionOS simulator

If you are just learning, then the Simulator has all the functionality you need to try out just about anything you can build (again, minus SharePlay and Personas). If you are very bold, you might even be able to publish something to the store to recoup some of your investment.

If you want to develop for the AVP with Unity 3D, your startup costs go up to around $2,600:

- $2,040 Unity Pro license
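The two totals in this summary are just sums of the line items, which can be sketched as below. The constant names are mine, and the figures are the ones quoted in this post. Keep in mind that the Apple account and the Unity Pro seat are yearly costs while the hardware is a one-time purchase, so these are first-year numbers.

```python
# Line items quoted in this post (March 2024, USD).
APPLE_DEV_ACCOUNT = 100   # per year
MIN_SPEC_MAC = 499        # one-time, used Mac mini M1/16/256
UNITY_PRO = 2040          # per year, one seat

# First-year cost of learning with the native stack.
native_total = APPLE_DEV_ACCOUNT + MIN_SPEC_MAC

# First-year cost if you add Unity Pro on top of that.
unity_total = native_total + UNITY_PRO

print(f"Native: ${native_total:,}")
print(f"Unity:  ${unity_total:,}")
```

The exact sums are $599 and $2,639, which I round to about $600 and $2,600.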

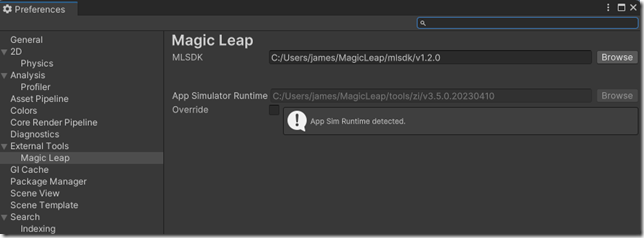

If you are coming from HoloLens/Magic Leap/Meta Quest development, the question here might just be how much you are willing to pay to not have to learn Swift and RealityKit.