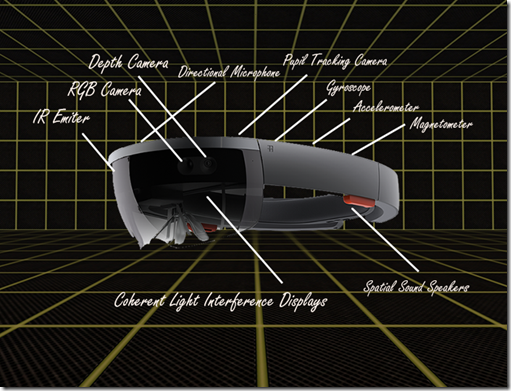

The image above is a best guess at the underlying technology being used in Microsoft’s new HoloLens headset. It’s not even that great a guess since the technology appears to still be in the prototype stage. On the other hand, the product is tied to the Windows 10 release date, so we may be seeing a consumer version – or at the very least a dev version – sometime in the fall.

Here are some things we can surmise about HoloLens:

a) the name may change – HoloLens is a good product name but isn’t quite where we might like it to be, in a league with Kinect, Silverlight or Surface for branding genius. In fact, Surface was such a good name, it was taken from one product group and simply given to another in a strange twist on the build vs buy vs borrow quandary. On the other hand, HoloLens sounds more official than the internal code name, Baraboo — isn’t that a party hippies throw themselves in the desert?

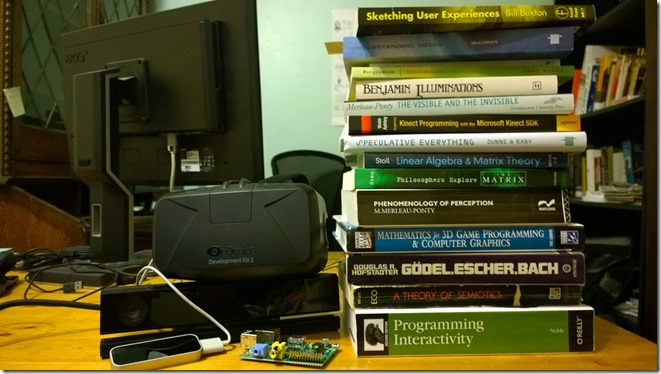

b) this is augmented reality rather than virtual reality. Facebook’s Oculus Rift, which is an immersive fully digital experience, is an example of virtual reality. Other fictional examples include The Oasis from Ernest Cline’s Ready Player One, The Metaverse from Neal Stephenson’s Snow Crash, William Gibson’s Cyberspace and the VR simulation from The Lawnmower Man. Augmented reality involves overlaying digital experience on top of the real world. This can be accomplished using holography, transparent displays, or projectors. A great example of projector-based AR is the RoomAlive project by Hrvoje Benko, Eyal Ofek and Andy Wilson at Microsoft Research. HoloLens uses glasses or a head-rig – depending on how generous you feel – to implement AR. Magic Leap – with heavy investment from Google – appears to be doing the same thing. The now dormant Google Glass was neither AR nor VR, but was instead a heads-up display.

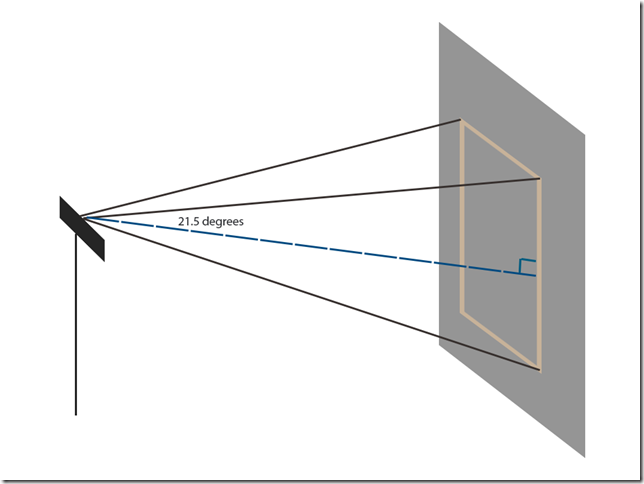

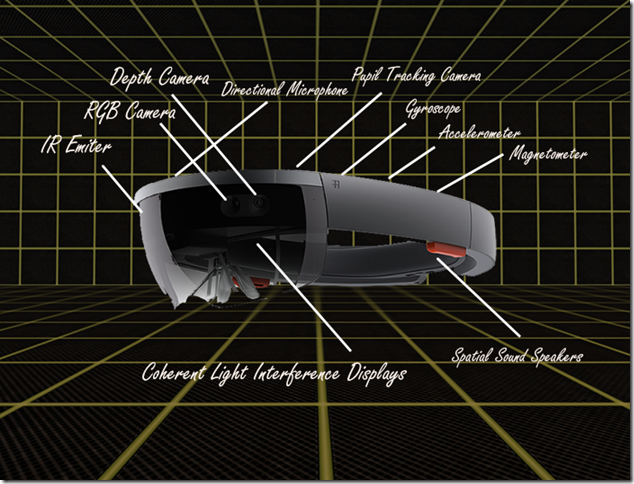

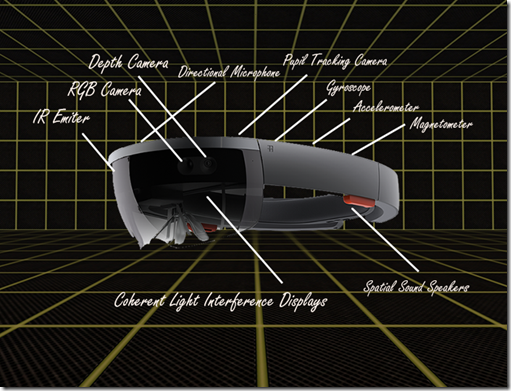

c) HoloLens uses Kinect technology under the plastic covers. While the depth sensor in the Kinect v2 has a field of view of 70 degrees by about 60 degrees, the depth capability in HoloLens is reported to include a field of view of 120 degrees by 120 degrees. This indicates that HoloLens will be using the Time-of-Flight technology used in Kinect v2 rather than the structured light from Kinect v1. This setup requires both an IR emitter and a depth camera, combined with sophisticated timing and phase technology, to calculate depth efficiently and relatively inexpensively.
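To make the "timing and phase" part a little more concrete, here is a minimal sketch of how a phase-based time-of-flight sensor could turn a measured phase shift into a distance. This is the textbook continuous-wave formula, not anything confirmed about HoloLens or Kinect internals, and the 80 MHz modulation frequency and the function names are assumptions picked purely for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_phase(phase_shift_rad, modulation_hz):
    """Distance implied by the phase shift of a modulated IR signal.

    The light travels to the surface and back, which is why the
    denominator uses 4*pi rather than 2*pi.
    """
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Farthest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


if __name__ == "__main__":
    f_mod = 80e6  # hypothetical modulation frequency, not a published spec
    print(depth_from_phase(math.pi / 2, f_mod))  # quarter-cycle phase shift
    print(unambiguous_range(f_mod))
```

The trade-off this exposes: a higher modulation frequency gives finer depth resolution but a shorter range before the phase wraps around, which is one reason real sensors tend to combine several frequencies.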

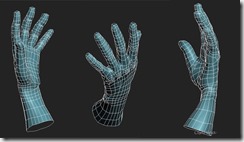

d) the depth camera is being used for multiple purposes. The first is for gesture detection. One of the issues that faced both Oculus and Google Glass was that they were primarily display technologies. But a computer monitor is useless without a keyboard or mouse. Similarly, Oculus and Glass needed decent interaction metaphors. Glass relied primarily on speech commands and tapping and clicking. Oculus had nothing until their recent acquisition of NimbleVR. NimbleVR provides a depth camera optimized for hand and finger detection over a small range. This can be mounted in front of the Oculus headset. Conceptually, this allows people to use hand gestures and finger manipulations in front of the device. A virtual hand can be created as an affordance in the virtual world of the Oculus display, allowing users to interact with virtual objects and virtual interactive menus in vitro.

The depth sensor in HoloLens would work in a similar way except that instead of a virtual hand as affordance, it’s just your hand. You will use your hand to manipulate digital objects displayed on the AR lenses or to interact with AR menus using gestures.

An interesting question is how many IR sensors are going to be on the HoloLens device. From the pictures that have been released, it looks like we will have a color camera and a depth sensor for each eye, for a total of two depth cameras and two RGB cameras located near the joint between the lenses and the headband.

e) HoloLens is also using depth data for 3D reconstruction of real world surfaces. These surfaces are then used as virtual projection surfaces for digital textures. Finally, the blitted image is displayed on the transparent lenses.

This sort of reconstruction is a common problem in projection mapping scenarios. A great example of applying this sort of reconstruction can be found in the Halloween edition of Microsoft Research’s RoomAlive project. In the first image above, you are seeing the experience from the correct perspective. In the second image, the image is captured from a different perspective than the one that is being projected. From the incorrect perspective, it can be seen that the image is actually being projected on multiple surfaces – the various planes of the chair as well as the wall behind it – but foreshortened digitally and even color corrected to make the image appear cohesive to a viewer sitting at the correct position. One or more Kinects must be used to calibrate the projections appropriately against these multiple surfaces. If you watch the full video, you’ll see that Kinect sensors are used to track the viewer as she moves through the room and the foreshortening / skewing occurs dynamically to adjust to her changing position.

The Minecraft AR experience being used to show the capabilities of HoloLens requires similar techniques. The depth sensor is required not only to calibrate and synchronize the digital display to line up correctly with the table and other planes in the room, but also to constantly adjust the display as the player moves around the room.
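The "constantly adjust the display as the player moves" part can be sketched with a plain pinhole projection: a hologram pinned to a spot in the room has to be redrawn at a different screen position every time the head moves or turns. This is only a toy model under my own assumptions (head pose reduced to a position plus yaw, a made-up focal length in pixels, and a hypothetical function name); a real headset would track full 3D orientation.

```python
import math


def project_point(point, head_pos, head_yaw_rad, focal_px=1000.0):
    """Project a world-anchored point into the view of a head pose.

    point, head_pos: (x, y, z) in metres; +z is forward when yaw is 0.
    head_yaw_rad: rotation about the vertical axis, positive toward +x.
    Returns (u, v) pixel offsets from the view centre, or None when the
    point is behind the viewer.
    """
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    dz = point[2] - head_pos[2]

    # Express the offset in the head's own frame (right and forward axes).
    cos_y, sin_y = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    x_cam = cos_y * dx - sin_y * dz
    z_cam = sin_y * dx + cos_y * dz

    if z_cam <= 0:
        return None  # behind the viewer, nothing to draw
    return (focal_px * x_cam / z_cam, focal_px * dy / z_cam)


if __name__ == "__main__":
    block = (0.0, 0.0, 2.0)  # a virtual block two metres ahead
    print(project_point(block, (0.0, 0.0, 0.0), 0.0))
    print(project_point(block, (0.5, 0.0, 0.0), 0.0))  # step to the right
```

Stepping half a metre to the right pushes the block to the left of the view, which is exactly the sort of correction the depth sensor and head tracking have to feed into the renderer on every frame.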

f) are the display lenses stereoscopic or holographic? At this point no one is completely sure, though indications are that this is something more than the stereoscopic display technique used in the Oculus Rift. While a stereoscopic display will create the illusion of depth and parallax by creating a different image for each lens, something holographic would actually be creating multiple images per lens and smoothly shifting through them based on the location of each pupil staring through its respective lens and the orientation and position of the player’s head.

One way of achieving this sort of holographic display is to have multiple layers of lenses pressed against each other and using interference shift the light projected into each pupil as the pupil moves. It turns out that the average person’s pupils typically move around rapidly in saccades, mapping and reconstructing images for the brain, even though we do not realize this motion is occurring. Accurately capturing these motions and shifting digital projections appropriately to compensate would create a highly realistic experience typically missing from stereoscopic reconstructions. It is rumored in the industry that Magic Leap is pursuing this type of digital holography.

On the other hand, it has also been reported that HoloLens is equipped with eye-tracking cameras on the inside of the frames, apparently to aid with gestural interactions. It would be extremely interesting if Microsoft’s route to achieving true holographic displays involved eye-tracking combined with a high display refresh rate rather than coherent light interference display technology as many people assume. Or, then again, it could just be stereoscopic displays after all.
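For the plain stereoscopic case, the "different image for each lens" trick boils down to shifting each eye's image horizontally by an amount that depends on how far away the object is supposed to be. Here is a rough sketch; the 63 mm interpupillary distance and the focal length in pixels are assumed values for illustration, not anything known about the HoloLens optics.

```python
def eye_offsets(depth_m, ipd_m=0.063, focal_px=1000.0):
    """Horizontal pixel shifts (left_eye, right_eye) for a point straight ahead.

    depth_m: how far away the point should appear, in metres
    ipd_m: distance between the pupils (assumed average value)
    focal_px: focal length of the virtual camera in pixels (assumed)
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    disparity = focal_px * ipd_m / depth_m
    # The left eye sees the point shifted right, the right eye shifted left.
    return (disparity / 2.0, -disparity / 2.0)


if __name__ == "__main__":
    for depth in (0.5, 1.0, 4.0):
        print(depth, eye_offsets(depth))
```

Nearby objects get a large shift between the two eyes and distant ones almost none, which is the cue the brain reads as depth.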

g) occlusion is generally considered a problem for interactive experiences. For augmented reality experiences, however, it is a feature. Consider a physical-to-digital interaction in which you use your finger/hand to manipulate a holographic menu. The illusion we want to see is of the hand coming between the player’s eyes and the digital menu. The player’s hand should block and obscure portions of the menu as he interacts with it.

The difficulty with creating this illusion is that the player’s hand isn’t really between the menu and the eyes. Really, the player’s hand is on the far side of the menu, and the menu is being displayed on the HoloLens between the player’s eyes and his hand. Visually, the hologram of the menu will bleed through and appear on top of the hand.

In order to re-establish the illusion of the menu being located on the far side of the hand, we need depth-sensors to accurately map an outline of the hand and arm and then cut a hand and arm shape out of the menu where the hand should be occluding it. This process has to be repeated as the hand moves in real-time and it’s kind of a hard problem.
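The cut-out step itself is simple to sketch, even if doing it fast and accurately is not. Assuming we already have a per-pixel depth map lined up with the display (a big assumption), we just blank out every menu pixel where the real world is closer than the menu is supposed to be. The function name and the convention that a depth of 0 means "no reading" are mine, chosen for illustration.

```python
def occlude_menu(menu_pixels, menu_depth_m, scene_depth_m, transparent=None):
    """Return a copy of the menu with holes wherever something real is in front.

    menu_pixels: 2D list of pixel values for the hologram
    menu_depth_m: distance at which the hologram should appear, in metres
    scene_depth_m: 2D list of depth readings in metres (0 = no reading)
    transparent: value to write where the menu should not be drawn
    """
    result = []
    for pixel_row, depth_row in zip(menu_pixels, scene_depth_m):
        out_row = []
        for pixel, depth in zip(pixel_row, depth_row):
            # A valid reading closer than the menu means a hand (or anything
            # else real) should be hiding this part of the hologram.
            in_front = 0 < depth < menu_depth_m
            out_row.append(transparent if in_front else pixel)
        result.append(out_row)
    return result


if __name__ == "__main__":
    menu = [["M", "M", "M"], ["M", "M", "M"]]
    depth = [[0.4, 2.0, 0.0], [0.6, 0.3, 1.0]]
    for row in occlude_menu(menu, 0.5, depth):
        print(row)
```

The hard part the sketch skips is everything around it: aligning the depth map with the display, cleaning up noisy hand edges, and doing it all again every frame.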

h) misc sensors: best guess is that in addition to depth sensors, color cameras and eye-tracking cameras, we’ll also get a directional microphone, gyroscope, accelerometer and magnetometer. Some sort of 3D sound has been announced, so it makes sense that there is a directional microphone or microphone array to complement it. This is something that is available on both the Kinect v1 and Kinect v2. The gyroscope, accelerometer and magnetometer are also guesses – but the Oculus hardware has them to track quick head movements, head position and head orientation. It makes sense that HoloLens will need them also.

i) the current form factor looks a little big – bigger than the Magic Leap is supposed to be but smaller than the current Oculus dev units. The goal – really everyone’s goal, from Microsoft to Facebook to Google – is to continue to bring down the size of sensors so we can eventually have heavy glasses rather than light-weight head gear.

j) vampires, infrared sensors and transparent displays are all sensitive to direct sunlight. This consideration can affect the viability of some AR scenarios.

k) like all innovative technologies, the success of HoloLens will depend primarily on what people use it for. The myth of the killer app is probably not very useful anymore, but the notion that you need an app store to sell a device is a generally accepted universal constant. The success of the HoloLens will depend on what developers build for it and what consumers can imagine doing with it.

Top 21 Ideas

Many of these ideas are borrowed from other VR and AR technology. In most cases, HoloLens will simply provide a better way to implement these notions. These ideas come from movies, from art installations, and from many years working at an innovative marketing agency where we prototyped these ideas day in and day out.

1. Shopping

Amazon made one click shopping make sense. Shopping and the psychology of shopping changes when we make it more convenient, effectively turning instant gratification into a marketing strategy. Using HoloLens AR, we can remodel a room with virtual furniture and then purchase all the pieces on an interactive menu floating in the air in front of us when we find the configuration we want. We can try and buy virtual clothes. With a wave of the hand we can stock our pantry, stock our refrigerator … wait, come to think of it, with decent AR, do we even need furniture or clothes anymore?

2. Gaming

IllumiRoom was a Microsoft project that never quite made it to product but was a huge hit on the web. The notion was to extend the Xbox One console with projections that reacted to what was occurring in the game but could also extend the visuals of the game into the entire living room. IllumiRoom (which I was fortunate enough to see live the last time I was in Redmond) also uses a Kinect sensor to scan the room in order to calibrate projection mapping onto surfaces like bookshelves, tables and potted plants. As you can guess, this is the same team that came up with RoomAlive. A setup that includes a $1,500 projector and a Kinect is a bit complicated, especially when a similar effect can now be created using a single unit HoloLens.

The HoloLens device could also be used for in-game Heads-Up notifications or even as a second screen. It would make a lot of sense if Xbox integration is on the roadmap and would set Xbox apart as the clear leader in the console wars.

3. Communication

‘nuff said.

4. Home Automation

Home automation has come a long way and you can now easily turn lights on and off with your smart phone from miles away. Turning your lights on and off from inside your own house may still involve actually touching a light switch. Devices like the Kinect have the limitation that they can only sense a portion of a room at a time. Many ideas have been thrown out to create better gesture recognition sensors for the home, including using wifi signals that go through walls to detect gestures in other rooms. If you were actually wearing a gestural device around with you, this would no longer be a problem. Point at a bulb, make a fist, “put out the light, and then put out the light” to quote the Bard.

5. Education

While cool visuals will make education more interesting, the biggest benefit of HoloLens for education is simple access. Children in rural areas in the US have to travel long distances to achieve a decent education. Around the world, the problem of rural education is even worse. What if educators could be brought to the children instead? This is one of the stated goals of Facebook’s purchase of Oculus Rift and HoloLens can do the same job just as well and probably better.

6. Medical Care

Technology can be used for interesting diagnostic and rehabilitation functions. The depth sensors that come with HoloLens will no doubt be used in these ways eventually. But like education, one of the great problems in medical care right now is access. If we can’t bring the patient to the doctor, let’s bring the GP to the patient and do regular check ups.

7. Holodeck

The RoomAlive project points the way toward building a Holodeck. All we have to do is replace Kinect sensors with HoloLens sensors, projectors with holographic displays, and then try not to break the HoloLens strapped to our heads as we learn Kung Fu.

8. Windows

Have you ever wished you could look out your window and be somewhere else? HoloLens can make that happen. You’ll have to block out natural light by replacing your windows with sheetrock, but after that HoloLens can give you any view you want.

But why stop at windows? You can digitize all your walls if you want, and HoloLens’ depth technology will take care of the rest.

9. Movies and Television

Oculus Rift and Samsung Gear VR have apps that let you watch movies in your own virtual theater. But wouldn’t it be more fun to watch a movie with your entire family? With HoloLens we can all be together on the couch but watch different things. They can watch Barney on the flatscreen while I watch an overlay of Meet the Press superimposed on the screen. Then again, with HoloLens maybe I could replace my expensive 60” plasma TV with a piece of cardboard and just watch that instead.

10. Therapy

It’s commonly accepted that white noise and muted colors relax us. Controlling our environment helps us to regulate our inner states. Behavioral psychology is based on such ideas and the father of behavioral psychology, B. F. Skinner, even created the Skinner box to research these ideas – though I personally prefer Wilhelm Reich’s Orgone box. With 3D audio and lenses that extend over most of your field of view, HoloLens can recreate just the right experience to block out the world after a busy day and just relax. shhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhh.

11. Concerts

Once a year in the Nevada desert a magical music festival is held called Baraboo. Or, I don’t know, maybe it’s held in Tennessee. In any case, getting to festivals is really hard and usually involves being around people who aren’t wearing enough deodorant, large crowds, and buying plastic bottles of water for $20. Wouldn’t it be great to have an immersive festival experience without all the things that get in the way? Of course, there are those who believe that all that other stuff is essential to the experience. They can still go and be part of the background for me.

12. Avatars

Gamification is a huge buzzword at digital marketing agencies. Undergirding the hype is the realization that our digital and RL experiences overlap and that it sometimes can be hard to find the seams. Vernor Vinge’s 2001 novella Fast Times at Fairmont High draws out the implications of this merging of physical and digital realities and the potential for the constant self reinvention we are used to on the internet bleeding over into the real world. Why continue with the name your parents gave you when you can live your AR life as ByteMst3r9999? Why be constrained by your biological appearance when you can project your inner self through a fun and bespoke avatar representation? AR can ensure that other people only see you the way that you want them to.

13. Blocking Other People’s Avatars

The flip side of an AR society invested in an avatar culture is the ability to block people who are griefing us. Parents can call a time out and block their children for ten-minute periods. Husbands can block their wives. We could all start blocking our co-workers on occasion. For serious offenses, people face permanent blocking as a legal sanction for bad behavior by the game masters of our augmented reality world. The concept was brilliantly played out in the recent Black Mirror Christmas special starring Jon Hamm. If you haven’t been keeping up with Black Mirror, go check it out. I’ll wait for you to finish.

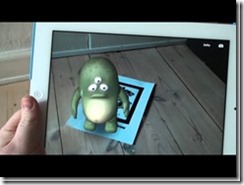

14. Augmented Media

Augmented reality today typically involves a smart phone or tablet and a fiducial marker. The fiducial is a tag or bar code that indicates to the app on your phone where an AR experience should be placed. Typically you’ll find the fiducial in a magazine ad that encourages you to download an app to see the hidden augmented content. It’s novel and fun. The problem involves having to hold up your tablet or phone for a period of time just to see what is sometimes a disappointing experience. It would be much more interesting to have these augmented media experiences always available. HoloLens can be always on and searching for these types of augmented experiences as you read the latest New Yorker or Wired. They needn’t be confined to ads, either. Why can’t the whole magazine be filled with AR content? And why stop at magazines? Comic books with additional AR content would change the genre in fascinating ways (Marvel’s online version already offers something like this, though rudimentary). And then imagine opening a popup book where all the popups are augmented, a children’s book where all the illustrations are animated, or a textbook that changes on the fly and updates itself every year with the latest relevant information – a Kindle on steroids. You can read about that possibility in Neal Stephenson’s Diamond Age – only available in non-augmented formats for now.

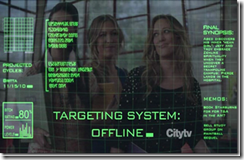

15. Terminator Vision

This is what we thought Google Glass was supposed to provide – but then it didn’t. That’s okay. With vision recognition software and the two RGB cameras on HoloLens, you’ll never forget a name again. Instant information will appear telling you about your surroundings. Maps and directions will appear when you gesture for them. Shopping associates will no longer have to wing it when engaging with customers. Instead, HoloLens will provide them with conversation cues and decision trees that will help the associate close the sale efficiently and effectively. Dates will be more interesting as you pull up the publicly available medical, education and legal histories of anyone who is with you at dinner. And of course, with the heartbeat monitor and ability to detect small fluctuations in skin tone, no one will ever be able to lie to you again, making salary negotiations and buying a car a snap.

16. Wealth Management

With instant tracking of the DOW, S&P and NASDAQ along with a gestural interface that goes wherever you go, you can become a day trader extraordinaire. Lose and gain thousands of dollars with a flick of your finger.

17. Clippit

Call him Jarvis if it helps. Some sort of AI personal assistant has always been in the cards. Immersive AR will make it a reality.

18. Impossible UIs

I don’t watch movies the way other people do. Whenever I go to see a futuristic movie, I try to figure out how to recreate the fantasy user experiences portrayed in it. Minority Report is an easy one – it’s a large area display, possibly projection, with Kinect-like gestural sensors. The communication device from the Total Recall reboot is a transparent screen and either capacitive touch or more likely a color camera doing blob recognition. The 3D touchscreen from Pacific Rim has always had me stumped. Possibly some sort of Leap Motion device attached to a Pepper’s Ghost display? The one fantasy UX I could never figure out until I saw HoloLens is the “Orison” computer made up of floating disks in Cloud Atlas. The Orison screens are clearly digital devices in a physical space – beautiful, elegant, and the sort of intuitive UX for which we should strive. Until now, they would have been impossible to recreate. Now, I’m just waiting to get my hands on a dev device to try to make working Orison displays.

19. Wiki World

Wiki World is a simple extension of terminator vision. Facts floating before your eyes, always available, always on. No one will ever have to look up the correct spelling for a word again or strain his memory for a sports statistic. What movie was that actor in? Is grouper ethical to eat? Is JavaScript an object-oriented language? Wiki World will make memorization obsolete and obviate all arguments – well, except for edit wars between Wikipedia editors, of course.

20. Belief Circles

Belief circles are a concept from Vernor Vinge’s Hugo award winning novel Rainbows End. Augmented reality lends itself to self-organizing communal affiliations that will create inter-subjective realities that are shared. Some people will share sci-fi themes. Others might go the MMO route and share a fantasy setting with a fictional history, origin story, guilds and factions. Others will prefer football. Some will share a common religion or political vision. All of these belief circles will overlap and interpenetrate. Taking advantage of these self-generating belief circles for content creation and marketing will open up new opportunities for freelance creatives and entrepreneurs over the next ten years.

21. Theater of Memory

Giulio Camillo’s memory theater belongs to a long tradition of mnemonic technology going back to Roman times and used by orators and lawyers to memorize long speeches. The scholar Frances Yates argued that it also belonged to another Renaissance tradition of neoplatonic magic that has since been superseded by science in the same way that memory technology has been superseded by books, magazines and computers. What Frances Yates – and after her Ioan Couliano – tried to show, however, was that in dismissing these obsolete modes of understanding the world, we also lose access to a deeper, metaphoric and humanistic way of seeing the world and are the poorer for it. The theater of memory is like Feng Shui – also discredited – in that it assumes that the way we construct our surroundings also affects our inner lives and that there is a sympathetic relationship between the macrocosm of our environment and the microcosm of our emotional lives. I’m sounding too new agey so I’ll just stop now. I will be creating my own digital theater of memory as soon as I can, though, as a personal project just for me.