It is 60 days after the day I thought the U.S. presidential election would have been settled … and yet. Intellectually, I recognize the outrageousness of the situation, based on the Constitution, based on my high school civics lessons, and based on my memories of the 2000 presidential election between Bush and Gore when everyone felt that any wrong move or overreach back then would have threatened the stability of the republic and the rule of law.

At the same time I have become inured to the cray-cray and as I listen today to recordings of President Trump’s corrupt, self-serving call to Georgia Secretary of State Raffensperger, I find that my intellectual recognition that norms are being broken (the norm that we trust the democratic system, the norm that we should all abide by the rules as they are written, the norm that we should assiduously avoid tampering with ‘the process’ in any way) is not accompanied by the familiar gut uneasiness that signals to humans that norms have been disturbed. That thing that makes up “common sense”, a unanimity between thought and feeling, is missing for me due to four and more years of gaslighting.

When common sense breaks down in this way, there are generally two possible causes. Either you have gone crazy or everyone else has. Like Ingrid Bergman in that George Cukor film, our first instinct is to look for a Joseph Cotten to reassure us that we are right and Charles Boyer is wrong. What always causes me dread about that movie, though, is the notion that things wouldn’t have gone so well had Ingrid Bergman not been gorgeous and drawn Cotten’s gaze and concern.

In another film from a parallel universe, Cotten might have ignored Bergman, and she would have withdrawn from the world, into herself, and pursued a hobby she had full control over, like crochet, or woodworking, or cosplaying. Many do.

Over the past two decades, nerdiness has shifted from being a character flaw into a virtue, from something tacitly acknowledged into a lifestyle to be pursued. The key characteristic of “nerdiness” is the willingness to allow a passion to bloom into an obsession to the point of wanting to know every trivial and quadrivial aspect of a subject. True nerdiness is achieved when we take a matter just that bit too far, when friendships are broken over opinions concerning the Star Wars prequels, or when marriages are split over the classification of a print font.

The loss of the sensus communis can also mark the point where mere thought becomes philosophical. The hallmark of philosophical reflection is that moment when the familiar suddenly becomes unfamiliar and then demands our gaze with new fascination, like Ingrid Bergman suddenly drawing Joseph Cotten’s attention. For Heidegger this was the uncanniness of the world. For Husserl it was the epoche in which we bring into question the givenness of the world. And for Plato it is the desire for one’s lover, which one transfers to beauty in general, and finally to Truth itself.

Philosophers as a rule take things too far. They say forbidden things. They draw unexpected conclusions. They examine all the nooks and crannies of thought, exhaustively, to reach the conclusions they reach, often to the boredom of their audience. They were nerds before we knew what nerds were.

Even in the world of philosophy, however, there are books and ideas that used to be considered too important to overlook but too nerdy to be made central to the discipline. Instead, they have existed on the margins of philosophy waiting for a moment when the Zeitgeist was ready to receive them.

Here are five works of speculative philosophy whose time, I believe, has come.

Simulacra and Simulation by Jean Baudrillard – This book describes virtual reality, a bit like William Gibson did with Neuromancer, before it was really a thing. The Wachowskis cite it as an inspiration for The Matrix and even put phrases from this work in one of Morpheus’s monologues. It is blessedly a short work that captures the essence of our virtual world today from a distance of four decades (it was first published in 1981). No one should be working in tech today without understanding what Baudrillard meant by “the desert of the real.”

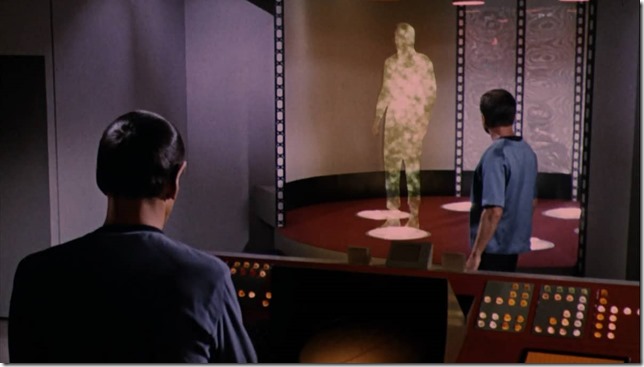

Reasons and Persons by Derek Parfit – Parfit took apart the notion of identity using thought experiments drawn from science fiction. In one of his most striking arguments, introduced in Part Three of his work, in a section called “Simple Teletransportation and the Branch-Line Case”, Parfit posits a machine that allows speed-of-light travel by scanning a person into data, sending that data to another planet, and then reconstituting that data as matter to recreate the original person. Of course, we have to destroy the original copy during this process of teletransportation. Parfit toys with our intuitions of what it means to be a person in order to arrive at philosophical gold. If the reader is troubled by this scenario of murder and cloning cum teleportation, Parfit is able to point out that this is what we go through in our lives. How much of the matter we were born with is still a part of our physical bodies? Little to none?

For the coup de grâce, one can apply the lessons of teletransportation to address our pointless fear of death. What is death, after all, but a journey through the teleporter without a known terminus?

The Conscious Mind by David J. Chalmers – Just as the 4th century BCE saw a flourishing of philosophy and science in Greece, or the 16th century saw an explosion of literary invention in England, in the 1990s Australia became the home of the most innovative works on the Philosophy of Mind in the world. Out of that period of wild genius, David J. Chalmers came out against the general trend, driven by Daniel Dennett and Paul Churchland, that denied the reality of consciousness. Chalmers made the case through exacting arguments that consciousness is not only real, but is a fundamental property of the universe, alongside spatiality and temporality.

Hegel and the Metaphysics of Absolute Negativity by Brady Bowman – Since Dale Carnegie’s important work reforming the habits of white collar labor, positive thinking has been the ethos of professional life. The Marxian threat of alienated labor is eliminated by refusing to acknowledge the possibility of alienation in the corporate managerial class. Just as movie Galadriel tells us that “history became legend, legend became myth”, the power of positive thinking became a tenet of faith, then a method of prosperous Biblical exegesis, and finally a secret.

Do you ever get tired of mindless positivism? What if the underlying engine of the universe turns out not to be positive thinking but absolute negativity? And what if this can be proven through Hegel’s advanced dialectical logic? How much would you pay for a secret like that?

The Emperor’s New Mind by Roger Penrose – Penrose is a brilliant mathematical physicist who unleashed his learned background on the problem of human consciousness. Do physics and quantum physics in particular confirm or reject our theories about the human soul? I’ve always loved this book because Penrose comes up with a solution to human consciousness in a somewhat unphilosophical way – which made many philosophers nervous. The crux of his argument for the place of mind in a quantum universe is the size horizon of some features of the human brain. Ultimately, I think, Penrose provides a way to reconcile Kantian metaphysics with modern cutting-edge physics and biology in a way that works – or that at least is consistent and the ground for the possibility of Kantianism.

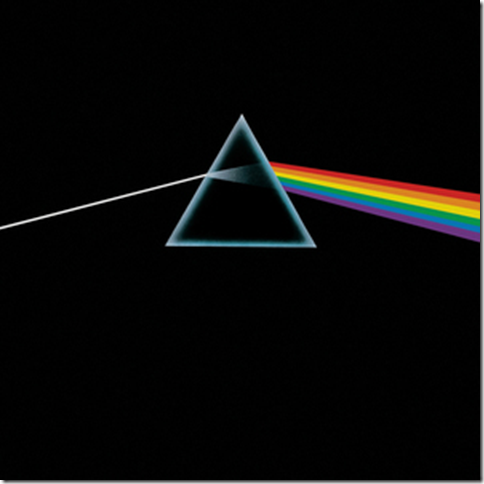

Honorable mention: Darkside by Tom Stoppard – if you have been watching The Good Place then you should be familiar with The Trolley Problem, a thought experiment used to tease our ethical intuitions and commitments. What could make The Trolley Problem even better? What if it is incorporated into a radio play by one of our greatest living English dramatists, performed to the tracks of Pink Floyd’s Dark Side of the Moon, and acted out by Bill Nighy (The Hitchhiker’s Guide to the Galaxy), Rufus Sewell (Dark City) and Iwan Rheon (Game of Thrones)?