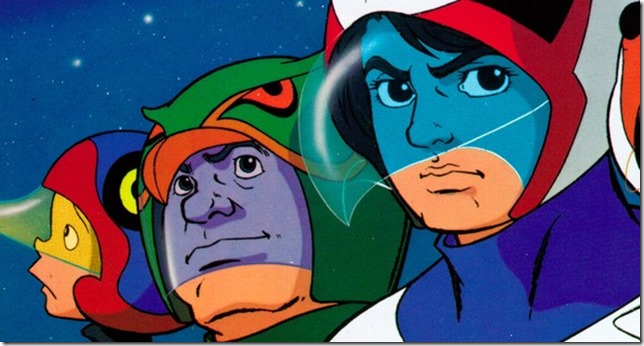

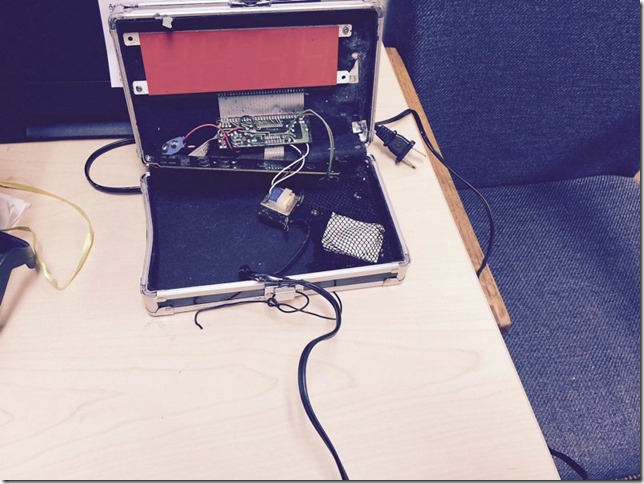

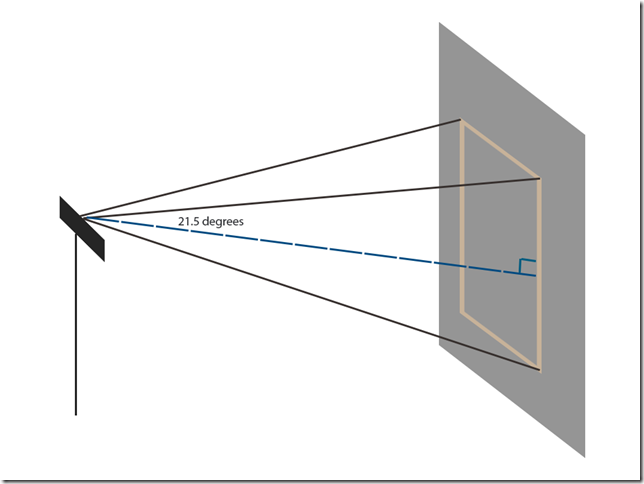

This is the picture of the homemade clock Ahmed Mohamed brought to his Irving, Texas, high school. Apparently no one ever mistook it for a bomb, but they did suspect that it was made to look like a bomb, and so they dragged the hapless boy off in handcuffs and suspended him for three days.

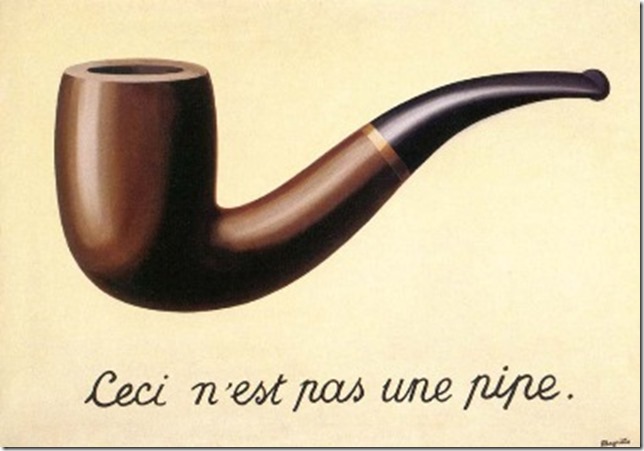

This is a strange case of perception versus reality in which the virtual bomb was never mistaken for a real bomb. Instead, what was identified was that it was only a bomb virtually and, as with all things virtual, therefore required some sort of explanation.

The common sympathetic explanation is that this isn’t a picture of a virtual bomb at all but rather a picture of a homemade clock. Ahmed recounts that he made the clock, in maker fashion, in order to show an engineering teacher because he had done robotics in middle school and wanted to get into a similar program in high school. Homemade clocks, of course, don’t require an explanation since they aren’t virtually anything other than themselves.

It turns out, however, that the picture at the top does not show a homemade maker clock. Various engineering types have examined the images and determined that it is in fact a disassembled clock from the 1980s.

The telling aspect is the DC power cord, which doesn’t actually get used in homemade projects. Instead, anyone working with Arduino projects typically (pretty much always) uses AA batteries. The clock components have also been traced back to their original source, so the evidence seems pretty solid.

The photo at the top shows not a virtual bomb nor a homemade clock but, in fact, a virtual homemade clock. That is, it was made to look like a homemade clock but was mistakenly believed to be something made to look like a homemade bomb.

[As a disclaimer about intentions, which is necessary because getting on the wrong side of this gets people in trouble, I don’t know Ahmed’s intentions and while I’m a fan of free speech I can’t say I actually believe in free speech having worked in marketing and I think Ahmed Mohamed looks absolutely adorable in his NASA t-shirt and I have no desire to be placed in company with those other assholes who have shown that this is not a real homemade clock but rather a reassembled 1980s clock and therefore question Ahmed’s motives whereas I refuse to try to get into a high schooler’s head, having two of my own and knowing what a scary place that can be … something, something, something … and while I can’t wholeheartedly support every tweet made by Richard Dawkins and have at times even felt in mild disagreement with things he and others have tweeted on Twitter I will say that I find his book The Selfish Gene a really good read … etc, etc, … and for good measure fuck you Fox News.]

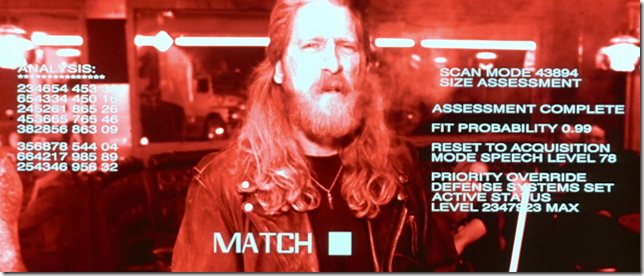

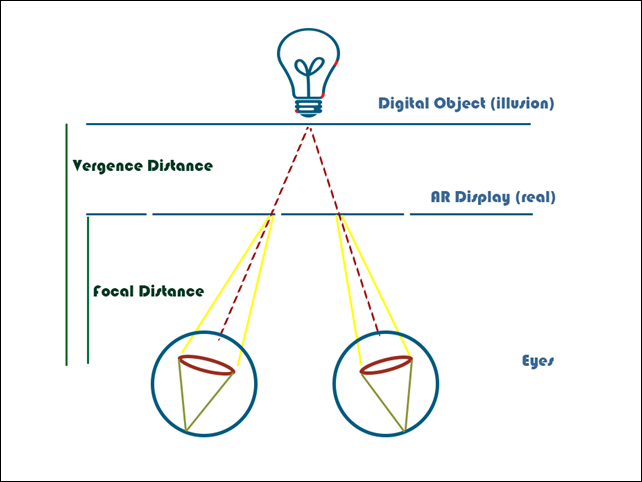

The salient thing for me is that we all implicitly know that a real bomb isn’t supposed to look like a bomb. The authorities at Ahmed’s high school knew that immediately. Bombs are supposed to look like shoes or harmless tourist knickknacks. If you think it looks like a bomb, it obviously isn’t. So what does it mean to look like a bomb (to be virtually a bomb) but not be an actual bomb?

I covered similar territory once before in a virtual exhibit called les fruits dangereux and at the time concluded that virtual objects, like post-modern novels, involve bricolage and the combining of disparate elements in unexpected ways: for instance, combining phones, electrical tape and fruit, or combining clock parts and pencil cases. Disrupting categorical thinking at a very basic level makes people – especially authority people – suspicious and unhappy.

Which gets us back to racism, which is apparently what has happened to Ahmed Mohamed, who was led out of school in handcuffs in front of his peers – and we’re talking high school! And he wasn’t asking to be called “McLovin.” It’s pretty cruel stuff. The fear of racial mixing (socially or biologically) always raises its head and comes from the same desire to categorize people and things into bento box compartments. The great fear is that we start to acknowledge that we live in a continuum of types rather than distinct categories of people, races and objects. In the modern age, mass production makes all consumer objects uniform in a way that artisanal objects never were, while census forms do the same for people.

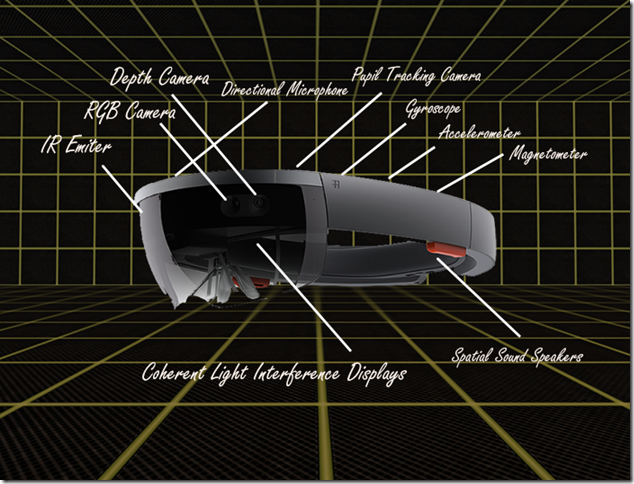

Virtual reality will start by copying real world objects in a safe way. As with digital design, it will start with isomorphism to make people feel safe and comfortable. As people become comfortable, bricolage will take hold simply because, in a digital world rather than a commoditized/commodified world, mashups are easy. Irony and a bit of subversiveness will lead to bricolage with purpose as we find people’s fantasies lead them to combine digital elements in new and unexpected ways.

We can all predict augmented and virtual ways to press a digital button or flick through a digital menu projected in front of us in order to get a virtual weather forecast. Those are the sorts of experiences that just make people bored with augmented reality vision statements.

The true promise of virtual reality and augmented reality is that they will break down our racial, social and commodity thinking. Mixed reality has the potential to drastically change our social reality. How do social experiences change when the color of a person’s avatar tells you nothing real about them, when our social affordances no longer provide clues or shortcuts to understanding other people? In a virtual world, accents and the shoes people wear no longer tell us anything about their educational background or social status. Instead of a hierarchical system of discrete social values, we’ll live in a digital continuum.

That’s the sort of augmented reality future I’m looking forward to.

The important point in the Ahmed Mohamed case, of course, is that you shouldn’t arrest a teenager for not making a bomb.
