People continue to ask what the difference is between the Kinect for XBox One and the Kinect for Windows v2. I had to wait to unveil the Thanksgiving miracle to my children, but now I have some pictures to illustrate the differences.

On the sensors distributed through the developer preview program (thank you Microsoft!) there is a sticker along the top covering up the XBox embossing on the left. There is an additional sticker covering up the XBox logo on the front of the device. The power/data cables that come off the two sensors look a bit like tails. Like the bodies of the sensors, the tails are also identical. These sensors plug directly into the XBox One. To plug them into a PC, you need an additional adapter that draws power from a power cord, sends data over a USB 3.0 cable, and passes both through the special plugs shown in the pictures below.

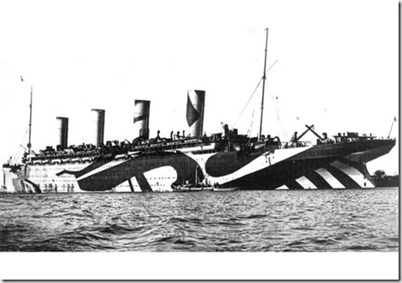

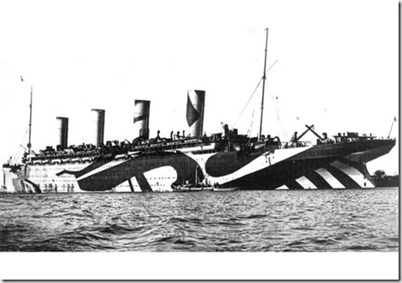

So what’s with those stickers? It’s a pattern called razzle dazzle (and sometimes razzmatazz). In World War I, the British navy used it as a form of camouflage for warships. Its purpose is to confuse rather than conceal: to obfuscate rather than occlude.

Microsoft has been using it not only on the Kinect for Windows devices but also on the developer units of the XBox One and the controllers that went out six months ago.

This is a technique of obfuscation popular with auto manufacturers who need to test their vehicles but do not want competitors or media to know exactly what they are working on. At the same time, automakers do use this peculiar pattern to let their competitors and the media know that they are, in fact, working on something.

What we are here calling razzle dazzle was, in a simpler age, called the occult. In Foucault’s Pendulum, his fascinating exploration of the occult, Umberto Eco demonstrates that the nature of hidden knowledge is to make sure other people know you have hidden knowledge. In other words, having a secret is no good if people don’t know you have it. Dr. Strangelove expressed it best in Stanley Kubrick’s classic film:

> Of course, the whole point of a Doomsday Machine is lost if you keep it a secret!

A secret, however, loses its power once it is revealed. This has always been the difficulty of sustaining mystery series like The X-Files and Lost. An audience is put off if all you ever do is tease them without telling them what’s really going on.

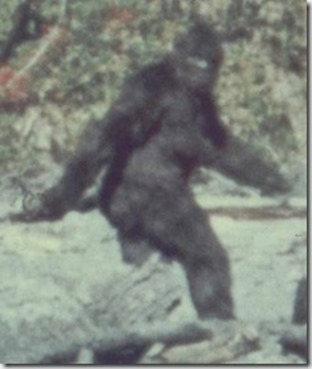

By the same token, the reveal is always a bit of a letdown. Capturing Bigfoot and finding out that it is some sort of hairy hominid would be terribly disappointing. Catching the Loch Ness Monster, even discovering that it is in fact a plesiosaur that survived the extinction of the dinosaurs, would be deflating compared to the sweetness of having it exist as a pure potential we don’t even believe in.

This letdown even applies to the future and to new technologies. New technologies are like Bigfoot in the way they disappoint when we finally get our hands on them. The initial excitement is always short-lived and is followed by a peculiar depression. Such was the case with Scott Hanselman’s infamous blog post “Leap Motion Amazing, Revolutionary, Useless,” known informally as his Dis-kinect post, which is an odd and ambivalent blend of snark and sympathy. Or perhaps snark and sympathy are simply our constant stance toward the always impending future.

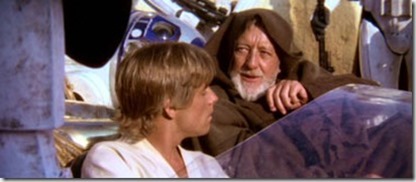

The classic bad reveal, the one that traumatized millions of idealistic would-be Jedi, is the quasi-scientific explanation of midichlorians in The Phantom Menace. The offences are many, not least that the mystery of the force is simply shifted onto magic bacteria that pervade the universe and live inside sentient beings: an explanation that explains nothing but does allow the force to be quantified as a midichlorian count.

The midichlorian plot device highlights an important point. Explanations, revelations and unmaskings do not always make things easier to understand, especially when the thing explained, like the force, is in some sense already understood intuitively. Every child already knows that by being good, one ultimately gets what one wants and gets along with others. This is essentially the lesson of that ancient Jedi religion: by following the tenets of the Jedi, one is able to move distant objects with one’s will, influence people, and be one with the universe. Over-analyzing this premise of childhood virtue destroys rather than enlightens.

The force, like virtue itself, is a kind of razzle dazzle: by obfuscating, it also brings something into existence. It creates a secret. In attempts to explain the potential of the Kinect sensor, people often resort to images of Tom Cruise at the Desk of the Future or Picard on the holodeck. The true emotional connection, however, is with the earlier (and adolescent) fantasy awakened by A New Hope: moving things simply by wanting them to move, or changing someone’s mind with a wave of the hand and a few words (“These aren’t the droids you’re looking for”). Ben Kenobi’s trick in turn has its primordial source in the infant’s crying and waving of the arms as a way to magically make food appear.

It’s not coincidental, after all, that Kinect sensors have always had both a depth sensor to track hand movements and a virtual microphone array to detect speech.