We all want to have an easy way to compare different depth cameras to one another. Where we often stumble in comparing depth cameras, however, is in making the mistake of thinking of them in the same way we think of color cameras or color displays.

When we go to buy a color television or computer monitor, for instance, we look to the pixel density in order to determine the best value. A display that supports 1920 by 1080 has 2.25 times the pixel density of a 1280 by 720 display. The first is marketed as Full HD (1080p) while the second is the baseline HD resolution (720p). From this, we have a rule of thumb that 1080p is 2.25 times denser than 720p. With digital cameras, we similarly look to pixel density in order to compare value. A 4 megapixel camera is roughly twice as good as a 2 megapixel camera, while an 8 MP camera is four times as good. There are always other factors involved, but for quick evaluations the pixel density trick seems to work. My phone happens to have a 41 MP camera and I don’t know what to do with all those extra megapixels. All I know is that it is over 20 times as good as that 2 megapixel camera I used to have, and that makes me happy.

When Microsoft’s Kinect 2 sensor came out, it was tempting to compare it against the Kinect v1 in a similar way: by using pixel density. The Kinect v1 depth camera had a resolution of 320 by 240 depth pixels. The Kinect 2 depth camera, on the other hand, had an increased resolution of 512 by 424 depth pixels. Comparing the total depth pixels provided by the Kinect v1 to the total provided by the Kinect 2 (76,800 vs 217,088), many people arrived at the conclusion that the Kinect 2’s depth camera was roughly three times better than the Kinect v1’s.

Another feature of the Kinect 2 is a greater field of view for the depth camera. Where the Kinect v1 has a field of view of 57 degrees by 43 degrees, the Kinect 2 has a 70 by 60 degree field of view. The new Intel RealSense 3D F200 camera, in turn, advertises an improved depth resolution of 480 by 360 depth pixels with an increased field of view of roughly 90 degrees by 72 degrees.

What often gets lost in these feature comparisons is that our two different depth camera attributes, resolution and field of view, can actually affect each other. Increased pixel resolution only translates directly into more detail if the field of view stays the same between different cameras. If we increase the field of view, however, we are in effect diluting the resolution, because each of the pixels we already have now has to cover more of the real world.

It turns out that 3D math works slightly differently from regular 2D math. To understand this better, imagine a sheet of cardboard held a meter out in front of each of our two Kinect sensors. How much of each sheet is actually caught by the Kinect v1 and the Kinect 2?

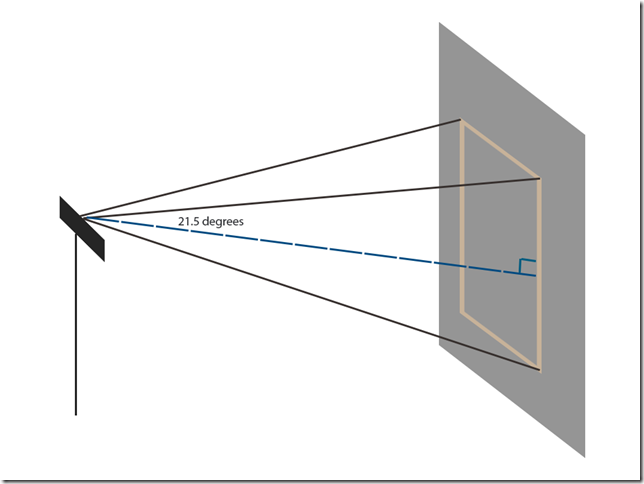

To derive the area of the inner rectangle captured by the Kinect v1 in the diagram above, we will use a bit of trigonometry. The field of view of the Kinect v1 is 57 degrees horizontal by 43 vertical. To get right triangles to work with, however, we will need to bisect these angles. For instance, half of 43 is 21.5. The tangent of 21.5 degrees times the 1 meter adjacent side (since the cardboard sheet is 1 meter away) gives us an opposite side of about .394 meters. Since this is only half of that rectangle’s side (because we bisected the angle) we multiply by two to get the full vertical side, which is about .79 meters. Using the same technique for the horizontal field of view, we capture a horizontal side of 1.09 meters.
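The bisect-and-double step can be sketched in a few lines of Python. This is only an illustration: the `footprint` function name and its parameters are my own, and it assumes the 57 by 43 degree field of view quoted earlier for the Kinect v1 and a flat sheet facing the camera head on.

```python
import math

def footprint(h_fov_deg, v_fov_deg, distance_m=1.0):
    """Width and height (in meters) of the flat rectangle a camera sees.

    Hypothetical helper: bisect each field-of-view angle, take the tangent
    to get half of a side, then double it to get the full side.
    """
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg / 2))
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg / 2))
    return width, height

# Kinect v1 field of view as quoted in this post (assumed values).
v1_width, v1_height = footprint(57, 43)
print(f"Kinect v1 sees about {v1_width:.2f} m by {v1_height:.2f} m at 1 m")
```

The printed sides should land within rounding of the figures worked out above. Passing a different `distance_m` shows how the captured rectangle grows as the sheet moves away from the sensor.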

Using the same method for the sheet of cardboard in front of the Kinect 2, we discover that the Kinect 2 captures a rectangular surface that is 1.4 meters by 1.15 meters. If we now calculate the area on the cardboard sheets in front of each camera and divide by each camera’s resolution, we discover that far from being three times better than the Kinect v1, the Kinect 2 depth camera is only about 1.5 times denser: each pixel of the Kinect v1 has to hold roughly 1.5 times as much of the real world as each pixel of the Kinect 2. It is still a better camera, but not by as much as one would think by comparing resolutions alone.
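For anyone who wants to check the division, here is a rough Python sketch of the comparison. The `area_per_pixel` helper is hypothetical, the field of view and resolution numbers are the ones quoted in this post, and the sketch treats every pixel as covering an equal share of the sheet, which is the same simplification the reasoning above makes.

```python
import math

def area_per_pixel(h_fov_deg, v_fov_deg, h_pixels, v_pixels, distance_m=1.0):
    """Average area (in square meters) of a flat sheet covered by one pixel.

    Hypothetical helper: computes the captured rectangle from the field of
    view, then divides its area evenly across the depth pixels.
    """
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg / 2))
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg / 2))
    return (width * height) / (h_pixels * v_pixels)

# Figures as quoted in this post (assumed, not measured).
v1 = area_per_pixel(57, 43, 320, 240)   # Kinect v1
k2 = area_per_pixel(70, 60, 512, 424)   # Kinect 2

print(f"Kinect v1: {v1 * 1e6:.1f} square mm per pixel")
print(f"Kinect 2:  {k2 * 1e6:.1f} square mm per pixel")
print(f"Kinect v1 pixel covers {v1 / k2:.2f} times the area of a Kinect 2 pixel")
```

The ratio on the last line should come out close to 1.5, well short of the factor of three suggested by raw pixel counts. The same function can be pointed at any other depth camera by swapping in its field of view and resolution.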

This was actually a lot of math in order to make a simple and mundane point: it all depends. Depth pixel resolutions do not tell us everything we need to know when comparing different depth cameras. I invite the reader to compare the true density of the RealSense 3D camera to the Kinect 2 or Xtion Pro Live camera if she would like.

On the other hand, it might be worth considering the range of these different cameras. The RealSense F200 cuts off at about a meter whereas the Kinect cameras only start performing really well at about that distance. Another factor is, of course, the accuracy of the depth information each camera provides. A third factor is whether one can improve the performance of a camera by throwing on more hardware. Because the Kinect 2 is GPU bound, it will actually work better if you simply add a better graphics card.

For me, personally, the most important question will always be how good the SDK is and how strong the community around the device is. With good language and community support, even a low quality depth camera can be made to do amazing things. An extremely high resolution depth camera with a weak SDK, on the other hand, might make a better paperweight than a feature-forward technology solution.

[I’d like to express my gratitude to Kinect for Windows MVPs Matteo Valoriani and Vincent Guigui for introducing me to this geometric bagatelle.]