Microsoft has just published the next release of the Kinect SDK, version 1.5: http://www.microsoft.com/en-us/kinectforwindows/develop/developer-downloads.aspx. Be sure to install both the SDK and the Toolkit.

This release is backward compatible with the 1.0 release of the SDK. This is important because it means you will not have to recompile applications you have already written with the Kinect SDK 1.0; they will continue to work as is. Even better, you can install 1.5 right over 1.0. The installer takes care of everything, so you don’t have to go through the messy process of tracking down and removing all the components of the previous install.

I do recommend upgrading your applications to 1.5 if you are able, however. There are improvements to tracking as well as the depth and color data.

Additionally, several things developers asked for following the initial release have been added. Near Mode, which allows the sensor to work as close as 40 cm, now also supports skeleton tracking (previously it did not).

Partial skeleton tracking is now also supported. While full-body tracking made sense for Xbox games, it makes less sense when people are sitting in front of their computers, or even simply in a crowded room. With the 1.5 SDK, applications can be configured to ignore everything below the waist and track just the ten upper-body skeleton joints. This is also known as seated skeleton tracking.
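To make “the ten upper-body joints” concrete, here is a minimal Python sketch. The joint names mirror the Kinect v1 skeleton’s joint names, but the filter function and the dictionary-of-positions representation are hypothetical illustrations, not the SDK’s API; in the SDK itself you switch the skeleton stream into seated mode rather than filtering joints yourself.

```python
# Hypothetical sketch: joint names follow the Kinect for Windows v1 skeleton,
# but this is plain Python, not the SDK's API.

# The ten upper-body joints reported in seated tracking mode.
SEATED_JOINTS = frozenset({
    "Head", "ShoulderCenter",
    "ShoulderLeft", "ElbowLeft", "WristLeft", "HandLeft",
    "ShoulderRight", "ElbowRight", "WristRight", "HandRight",
})


def upper_body_only(skeleton):
    """Return only the seated-mode joints from a full-body skeleton.

    `skeleton` is assumed to map joint names to (x, y, z) positions in meters.
    """
    return {name: pos for name, pos in skeleton.items() if name in SEATED_JOINTS}
```

Filtering a full 20-joint skeleton this way leaves exactly the ten joints that seated mode reports; everything from the hips down is dropped.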

Kinect Studio has been added to the Toolkit. If you have been working with the Kinect on a regular basis, you have probably developed several workplace traumas never dreamed of by OSHA as you tested your applications by gesticulating wildly in the middle of your co-workers. Kinect Studio allows you to record color, depth and skeleton data from an application and save it. Later, after making the necessary tweaks to your app, you can simply play the recording back. Best of all, the channel between your app and Kinect Studio is transparent: you do not have to implement any special code in your application to get recording and playback to work. It just works! Kinect Studio does not currently record voice, but we’ll see what happens in the future.

Besides partial skeleton tracking, skeleton tracking now also provides rotation information. A big complaint with the initial SDK release was that there was no way to find out if a player/user is turning his head. Now you can – along with lots of other tossing and turning: think Kinect Twister.

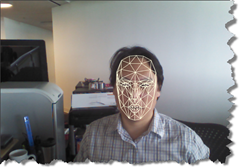
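Head-turn detection is a good example of what the new rotation data enables. Assuming the orientation of the joint you care about is available as a unit quaternion (w, x, y, z), you can extract its heading around the vertical axis and compare it with a threshold. The axis convention (Y up, rotation measured from +Z), the component order and the 30-degree threshold are assumptions of this sketch, not values taken from the SDK.

```python
import math


def yaw_degrees(w, x, y, z):
    """Heading (rotation about the vertical Y axis) of a unit quaternion, in degrees.

    Assumes a right-handed frame with Y up, headings measured from +Z,
    and (w, x, y, z) component order. These are assumptions of this sketch.
    """
    # Rotate the forward vector (0, 0, 1) by q and keep its X and Z components.
    fx = 2.0 * (w * y + x * z)
    fz = 1.0 - 2.0 * (x * x + y * y)
    return math.degrees(math.atan2(fx, fz))


def is_turning_head(w, x, y, z, threshold_deg=30.0):
    """Hypothetical rule: treat a heading beyond +/- threshold as a head turn."""
    return abs(yaw_degrees(w, x, y, z)) > threshold_deg
```

A pure 45-degree rotation about Y, `(cos 22.5°, 0, sin 22.5°, 0)`, yields a heading of 45 degrees, which this rule counts as a turn. Which sign means left and which means right depends on the handedness of the coordinate frame, so check it against real data before relying on it.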

Those are things developers asked for. The SDK 1.5 release, however, also brings several things no one was expecting. The Face Tracking Library (part of the Toolkit) allows devs to track 87 distinct points on the face. Additional data is provided indicating the location of the eyes, the vertices of a square around a player’s face (I used to jump through hoops with OpenCV to do this), as well as face gesture scalars that tell you things like whether the lower lip is curved upwards or downwards (and consequently whether a player is smiling or frowning). Unlike libraries such as OpenCV (in case you were wondering), the face tracking library uses RGB as well as depth and skeleton data to perform its analysis.

The other cool thing we get this go-around is a sample application called Avateering that demonstrates how to use the Kinect SDK 1.5 to animate a 3D model created in tools like Maya or Blender. The obvious use for this, though, is in common motion capture scenarios. Jasper Brekelmans has already taken this pretty far with OpenNI, and there have been several cool samples published on the web using the K4W SDK (you’ll notice that everyone reuses the same model and basic XNA code). The 1.5 Toolkit sample takes this even further, first by providing smoother tracking and second by adding joint rotation to the mocap animation. The code is complex and depends a lot on the way the model is generated. It’s a great starting point, though, and is just crying out for someone to modify it in order to re-implement the Shape Game from v1.0 of the SDK.
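A core step in avateering is mapping tracked joint rotations onto a model’s bone hierarchy. When each rotation is expressed relative to the joint’s parent, a bone’s absolute orientation comes from composing rotations from the root outward. Below is a minimal Python sketch of that composition with quaternions. It is a generic illustration, not the Avateering sample’s code; the (w, x, y, z) order and the parent-times-local convention are assumptions (some engines compose in the opposite order), and the joint names in the usage are only examples.

```python
import math


def quat_mul(a, b):
    """Hamilton product a*b of quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def absolute_rotations(chain):
    """Accumulate parent-relative rotations down a single joint chain.

    `chain` is a list of (joint_name, local_quaternion) pairs ordered from the
    root outward; each joint's absolute rotation is parent_absolute * local.
    """
    result = {}
    current = (1.0, 0.0, 0.0, 0.0)  # identity rotation at the root
    for name, local in chain:
        current = quat_mul(current, local)
        result[name] = current
    return result
```

For example, a 45-degree turn about Y at the shoulder followed by another 45-degree turn about Y at the elbow gives the elbow a 90-degree absolute rotation, `(cos 45°, 0, sin 45°, 0)`.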

The Kinect4Windows team has shown that it can be fast and furious as it continues to build on the momentum of the initial release.

There are some things I am still waiting for the community (rather than K4W) to build, however. One is a common way to work with point clouds. KinectFusion has already demonstrated the amazing things that can be done with point clouds and the Kinect. It’s the sort of technical biz-wang that all our tomorrows will be constructed from. Currently the Point Cloud Library (PCL) has done some integration with certain versions of OpenNI (the versioning issues just kill me). Here’s hoping PCL will do something with the SDK soon.
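As a baseline for what “working with point clouds” means, turning a depth frame into a point cloud is a back-projection through a pinhole camera model. The sketch below assumes a row-major list of depth values in millimetres, with 0 marking pixels that have no reading, and takes the focal lengths (`fx`, `fy`) and principal point (`cx`, `cy`) as parameters; the values for a real sensor come from calibration and are not given here. This is plain Python, not PCL or Kinect SDK code.

```python
def depth_to_points(depth_mm, width, height, fx, fy, cx, cy):
    """Back-project a row-major depth image (millimetres) into 3D points (metres).

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth 0 (no reading) are skipped.
    """
    points = []
    for v in range(height):          # image row
        for u in range(width):       # image column
            d = depth_mm[v * width + u]
            if d == 0:
                continue
            z = d / 1000.0           # millimetres -> metres
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

Image rows grow downward, so Y in this sketch points down; negate it if you want a Y-up frame like the skeleton’s.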

The second major stumbling block is the lack of a good gesture library, ideally one built on machine learning. GesturePak is a good start, though I have my doubts about using a pose approach to gesture recognition as a general-purpose solution. It’s still worth checking out while we wait for a better solution, however.
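For readers who haven’t seen one, here is roughly what a pose approach looks like: a gesture is a short sequence of poses, each a set of joint positions relative to a reference joint, matched within a tolerance and within a time limit. This is a generic, hypothetical Python sketch to illustrate the idea; it is not GesturePak’s implementation, and the joint names, tolerance and timeout are assumptions.

```python
import math


def matches_pose(joints, template, tolerance=0.1, reference="ShoulderCenter"):
    """True if every joint in `template` lies within `tolerance` metres of its
    target offset from the reference joint. Using offsets makes the check
    independent of where the user is standing."""
    ref = joints.get(reference)
    if ref is None:
        return False
    for name, target in template.items():
        if name not in joints:
            return False
        offset = tuple(p - r for p, r in zip(joints[name], ref))
        if math.dist(offset, target) > tolerance:
            return False
    return True


class PoseSequence:
    """Recognizes a gesture as poses hit in order, each within `timeout`
    seconds of the previous one."""

    def __init__(self, poses, timeout=1.0):
        self.poses = poses
        self.timeout = timeout
        self.index = 0          # next pose we are waiting for
        self.last_time = None   # time the previous pose was matched

    def update(self, joints, t):
        """Feed one frame at time `t`; return True when the final pose completes the gesture."""
        if self.index > 0 and t - self.last_time > self.timeout:
            self.index = 0      # took too long; start over
        if matches_pose(joints, self.poses[self.index]):
            self.index += 1
            self.last_time = t
            if self.index == len(self.poses):
                self.index = 0
                return True
        return False
```

The hand-tuned tolerance and timeout are exactly the kind of knobs that make a pose approach hard to generalize across users and gestures, which is where a learned recognizer would come in.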

In my ideal world, a common gesture idiom for the Kinect and other devices would be the responsibility of some of our best UX designers in the agency world. Maybe we could even call them a consortium! Once the gestures are hammered out, they would be passed on to engineers who would use machine learning to create decision trees for recognizing these gestures, much as the original skeleton tracking for Kinect was done. Then we would put devices out in the world and they would stream data to people’s Google Glass headsets and … but I’m getting ahead of myself. Maybe all that will be ready when the Kinect 2.5 SDK is released. In the meantime, I still have lots to chew on with this release.